Old people’s opinions are often the result of failing biological hardware, or wetware if you prefer. He should focus on anti-aging instead of forcing people to use comical tools used to make farting fat men for anything serious or important.

Maybe he should fly on an AI piloted airplane until it lands right.

Honestly at this point I could kill these rich old fucks with my bare hands and feel nothing.

Not even a little bit of happiness? 😁

urged workers to keep relying on AI tools even when they fall short. If AI does not yet work for a particular task, he said, employees should “use it until it does”

My honest reaction:

“I promise you, you will have work to do.”

Usually that’s slang for “get ready to be laid off.”

Nooo Not my Stock Portfolio!!! --Jensen Huang probably

He is the insane one

Typical CEOs who have no idea what the fuck AI actually is and just want you to use AI.

Jensen is actually pretty savvy; he knows exactly what it is and what its purpose is.

It’s whatever runs on Nvidia chips, and its purpose is to sell Nvidia chips.

Yeah. It’s just the current bandwagon he jumped on. Crypto was the previous one and gaming was the one before that.

Anything to sell more chips.

Seen like that it did help destroy the video game market. But with gamers cheering it on.

Diablo 2, C&C, Red Alert, Commandos, Point&Click, … the best games are not about 3D graphics, fight me!

Thankfully indie game devs haven’t forgotten that 2D is the best and good stories matter.

A shame there are no AAA games though. In the original sense. I bet it would work too but no, microtransactions and dark patterns it is.

Billionaire… For now.

If Nvidia tanks he’ll be a measly hundred-millionaire.

P.S. Large margin of error; I have not deeply analysed Jensen Huang’s stock holdings for a shitpost.

How fucking accurate was Silicon Valley? Huang might be in the Dos Commas club soon.

That’s a pretty low net worth considering Nvidia’s market cap.

I assumed he could afford to start Jurassic Park for real by now, purely to skin a T-rex for his jacket collection.

T-Rex scapula spatula.

Guess his golden parachute isn’t quite ready yet.

If AI does not yet work for a particular task, he said, employees should “use it until it does”

Uh, using the AI doesn’t train the AI, bud.

I think the lesson Jensen is pushing here is “use it until you learn to stop complaining about it”

Well that logic perfectly explains why some people keep making AI generated content even when others say they don’t want it, keep pushing slop until we do!

Genuinely, this is the driving misconception people have about AIs right now. That somehow everybody using them is making them smarter, when really it’s leading to model collapse

Can you help correct this for me? Don’t you feed them valuable training data and exposure to real world problems in the process of using them?

No. AI models are pre-trained; they do not learn on the fly. They are hoping to discover artificial general intelligence, which is what you are describing. The problem is that they don’t even understand exactly how training works. While engineers understand the overall architecture, the specific “reasoning” or decision-making pathways within the model are too complex to fully interpret, leading to a gap between how it works and why it makes a particular decision.

My assumption wasn’t that they learned on the fly, it was that they were trained on previous interactions. Eg the team developing them would use data collected from interaction with model v3 to train model v4. Seems like juicy relevant data they wouldn’t even have to go steal and sort

That’s true to an extent, but the interactions are only useful for training if you can mark them as good / bad etc. (which is why apps will sometimes ask you if a response was useful). But the ‘best’ training data, like professional programming, usually comes from premium tiers that are sold with a promise not to use your data for training (since corporations don’t want their secrets getting out).

You can’t train AI on AI output. It causes degradation in the newly trained model.

First: that’s wrong, every big LLM uses some data cleaned/synthesized by previous LLMs. You can’t solely train on such data without degradation, but that’s not the claim.

Second: AI providers very explicitly use user data for training, both prompts and response feedback. There’s a reason businesses pay extra to NOT have their data used for training.

yep ai training on ai is totally making things better…

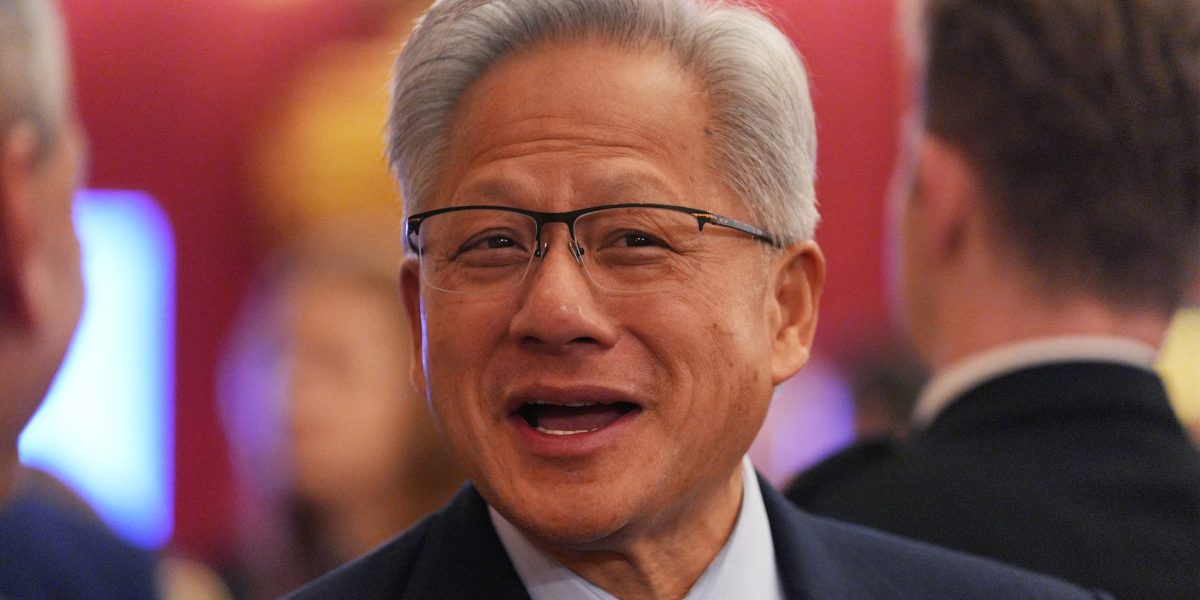

It wasn’t that long ago that Jensen was just the funny guy who’d come out, announce a bunch of cards and say some wacky shit. Everyone outside of the tech press would ignore him and that was that.

Kinda miss those days

I wouldn’t wish Billionaire’s Disease on my worst enemy.

Miss the days where he didn’t exist.

He’s Asian Gavin Belson. I love his live demos where nothing works.

Were you even alive then?

No, but I will be.

Exact timing depends on guillotine availability

if he wants to prop this bubble up he needs to sign more titties

I would not be surprised in the slightest if the cause of Nvidia drivers being absolute dogshit lately is because this absolute fucking MORON is forcing the devs to use AI to code the DRIVERS. THE ONE THING YOU DO NOT WANT FUCKING AI MEDDLING WITH.

If Microsoft did it with Windows 11 (which resulted in various SSD failures for a bunch of people), I have no doubt in my mind that Nvidia does it too.

THE ONE THING YOU DO NOT WANT FUCKING AI MEDDLING WITH

I mean… I can think of a lot more than just that one thing

…yeah, that list is way too extensive to mention, but I also said that because if you are programming something that literally makes your hardware understood by the operating system, it should not contain code that was not written by a human.

Yeah, I stopped updating my drivers after several updates just broke everything. Fuck that, I will stick with a version I know works.

What is up with the drivers?

In a word - instability. Downgrading driver versions for Nvidia cards is not an uncommon troubleshooting step now, which is not ideal.

AMD had poor drivers because they were inexperienced

Nvidia has poor drivers because they’re shoving AI in all their holes

That’s binary blob drivers for you, you just try different versions and hope it gets better someday.

One of the big advantages to open source drivers is that you can do a bisect to track some new breakage back to a specific patch. Sure, most people don’t know how to do that, but there’s a lot of people who can. And then the problem gets fixed for everybody.
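For anyone who hasn’t done one: a bisect is just a binary search over the ordered history. Here is a minimal sketch of the idea in Python, not how any real driver project does it. The names `first_bad` and `is_broken` and the patch numbers are made up for illustration; in practice `is_broken` would be you building that version and checking whether the bug shows up.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def first_bad(versions: Sequence[T], is_broken: Callable[[T], bool]) -> T:
    """Return the earliest version for which is_broken() is True.

    Assumptions (hypothetical setup): versions are ordered oldest to newest,
    the first one is known good, the last one is known bad, and once a
    version is broken every later one is broken too.
    """
    good, bad = 0, len(versions) - 1
    while bad - good > 1:
        mid = (good + bad) // 2
        if is_broken(versions[mid]):
            bad = mid   # breakage is at mid or earlier
        else:
            good = mid  # breakage came in after mid
    return versions[bad]


# Made-up example: 100 patches, breakage introduced by patch 37.
patches = list(range(1, 101))
culprit = first_bad(patches, lambda p: p >= 37)
```

With 100 patches that takes about 7 test builds instead of up to 100, which is why it is practical even when each test means a rebuild and a reboot.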

The most recent Linux driver limits the resolution of my HDMI output to 1080p for a 2k res monitor

But 2k is 1080p

“I want every task that is possible to be automated with artificial intelligence to be automated with artificial intelligence,” he added. “I promise you, you will have work to do.”

I think every task that can be automated with LLMs is already automated. It’s just that it’s a very short list.

Among them CEOs and some politicians.

While I agree CEOs don’t do much work, we still need a person there, because code can’t be held accoun- never mind, that ship has long sailed.

“A computer can never be held accountable. Therefore a computer must never make a management decision.”

- IBM motto, 1979

Although at this point our computing philosophies from that era are ancient history according to these shortsighted people.

Moment of Irony:

For this story, Fortune used generative AI to help with an initial draft. An editor verified the accuracy of the information before publishing.

🫠

Ok but that’s peak AI-use isn’t it? Have AI give you the rough draft, verify what needs verifying, done. If the information is correct I don’t care who wrote it.

AInception

“I want every task that is possible to be automated with artificial intelligence to be automated with artificial intelligence,” he added. “I promise you, you will have work to do.”

Well, yeah. Obviously, someone is going to have to unfuck everything AI fucked up…so, in a way, using AI is kind of like adding a layer of job security to your job.

Reminds me of when companies offshored their whole dev team and just sent requirements to them thinking they’d make code cheaper.

Reminds me of when companies offshored their whole dev team and just sent requirements to them thinking they’d make code cheaper.

I mean, it was cheaper. It’s just that it was also awful. It was basically like firing all your senior devs and giving their work to randos who can’t code, but with plausible deniability.

Yes it was cheaper, even after they had to re-hire the folks they fired so they could fix the code they sent to a dev farm overseas with just a vague set of requirements and no oversight.

But the same sort of attitude is happening with AI, like the code it’s making won’t require review and testing.