Need to let loose a primal scream without collecting footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Semi-obligatory thanks to @dgerard for starting this)

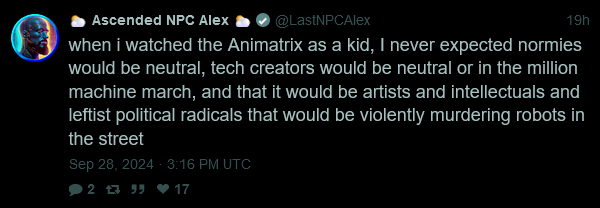

Saw an unexpected Animatrix reference on Twitter today - and from an unrepentant promptfondler, no less:

This ended up starting a lengthy argument with an “AI researcher” (read: promptfondler with delusions of intelligence), which you can read if you wanna torture yourself.

yes. that’s all true, but academics and artists and leftists are actually calling for Buttlerian jihad all the time. when push comes to shove they will ally with fascists on AI

This guy severely underestimates my capacity for being against multiple things at the same time.

The type of guy who was totally convinced by the ‘but what if the AI needs to generate slurs to stop the nukes?’ argument.

Guy invented a new way to misinterpret the matrix, nice. Was getting tired of all the pilltalk

People are “blatantly stealing my work,” AI artist complains

When Jason Allen submitted his bombastically named Théâtre D’opéra Spatial to the US Copyright Office, they weren’t so easily fooled as the judges back in Colorado. It was decided that the image could not be copyrighted in its entirety because, as an AI-generated image, it lacked the essential element of “human authorship”. The office decided that, at best, Allen could copyright specific parts of the piece that he worked on himself in Photoshop.

“The Copyright Office’s refusal to register Theatre D’Opera Spatial has put me in a terrible position, with no recourse against others who are blatantly and repeatedly stealing my work without compensation or credit.” If something about that argument rings strangely familiar, it might be due to the various groups of artists suing the developers of AI image generators for using their work as training data without permission.

and now when somebody generates exactly the same thing, it won’t be new stuff either

El Reg: Hipster whines at tech mag for using his pic to imply hipsters look the same, discovers pic was of an entirely different hipster

Appropriately for this sort of meaningless bilge, the name is also bullshit. The way to say “space opera” in French is “space opera”.

“Space opera’s the same, but they call it le space opera.”

It’s been a long time since I lived in France, so my sense of what is idiomatic has no doubt grown rusty, but “Théâtre D’opéra” doesn’t sound right. The word “Théâtre” doesn’t belong in a reference to the place where operas are performed. It’s “L’opéra Garnier” and “L’opéra Bastille” in Paris and “L’opéra Nouvel” in Lyon, for example. I’d read “théâtre d’opéra” as more like “operatic theatre” in the sense of a genre (contrasted with, e.g., spoken-word theatre). I could be completely wrong here, but the title feels like a naive machine translation.

That’s absolutely right.

Not a sneer, but a pretty solid piece on the slop-nami: Drowning in Slop, by Max Read

Twitter — Elon Musk’s X — may be the most fruitful platform for this kind of search thanks to its sub-competent moderation services.

Zing.

HN seems to be particularly deranged today, doesn’t it?

It mostly seems to be a mopey debate over whether Saltman’s impending apotheosis is good or bad.

I think they’ve been pretty sane AI-wise, lately.

you sure about that?

The most depressing thing for me is the feeling that I simply cannot trust anything that has been written in the past 2 years or so and up until the day that I die. It’s not so much that I think people have used AI, but that I know they have with a high degree of certainty, and this certainty is converging to 100%, simply because there is no way it will not. If you write regularly and you’re not using AI, you simply cannot keep up with the competition. You’re out. And the growing consensus is “why shouldn’t you?”, there is no escape from that.

This is someone who literally can’t tell good writing from bad, so he assumes everyone is using AI

He’s so close to being depressed enough to maybe ask a vital and important question about meaning and his own relationships with technology. But probably he’ll just buy more AI.

Watching AI guys slowly come around feels similar to how people have to care for their alcoholic relatives. Folks have to come to the point where they recognize where the unacceptable bullshit lies on their own, then you can show them the cold hard facts and a path back to the real world, but getting there is absolutely exhausting and often heartbreaking.

Our anti-AI militia will be called “The Artists’ Rifles”

Sam Altman says taking psychedelics ‘significantly changed’ his mindset

Altman being a druggie would go some way to explaining his utter disconnect from reality and utter lack of moral fibre

True believers at Vox’s Future Perfect “vertical” let out a heartfelt REEEEEE as Saltman makes the obvious move to secure all the profits

https://www.vox.com/future-perfect/374275/openai-just-sold-you-out

The Zitron-pilled among us probably suspect that part of the real reason for this is, ironically, to obscure the fact that OpenAI has no real profits because of how ludicrously expensive their models are to train and operate and how limited the actual use cases that people will pay for have proven. It’s better from a “getting investor money” perspective to have everyone talking about how terrible it is that investor profits are no longer capped for humanitarian reasons than to have more people ask whether we’re getting close to the peak of this bubble.

this is so fucking funny. bro, nobody but you is surprised

capitalist: does capitalism

liberal: Ah fuck, I can’t believe you’ve done this.

You think capitalists would do that? Go in front of the government and tell lies?

E: this is also my feeling about Altman saying ‘I did drugs and I changed bro’.

I changed*

*I decided to get a blood boy!!!

Turns out those so called “wallet inspectors” just want your money!

Governor Newsom, are you seeing this?

Congress, are you seeing this?

World, are you seeing this??

Expanding on that, part of me feels Altman is gonna find all the rhetoric he made about “AI doom” being used against him in the future - man’s given the true believers reason to believe he’d commit omnicide-via-spicy-autocomplete for a quick buck.

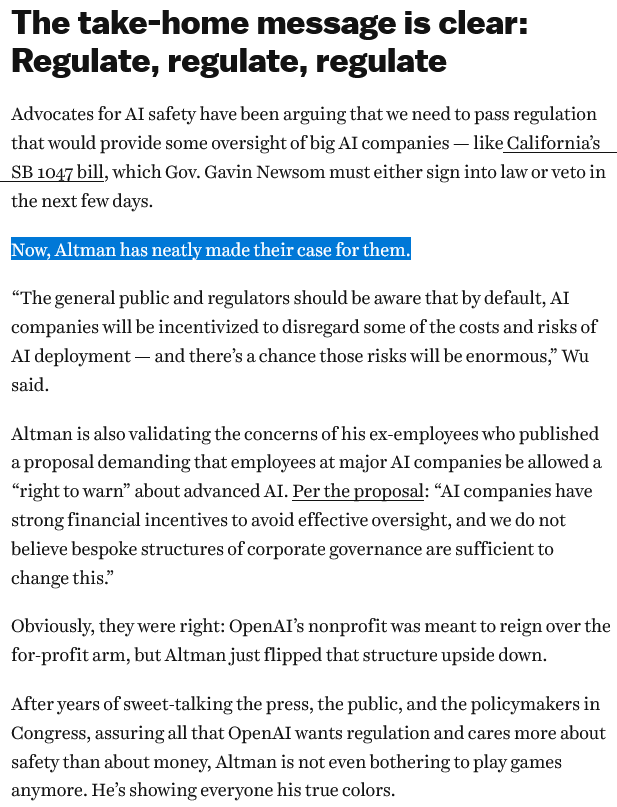

Hell, the true believers who made this pretty explicitly pointed out Altman’s made arguing for regulation a lot easier:

shocked that scorpions in a scorpion’s nest funded by their scorpion mates might have fallen into stinging

a most shockedpikachu article, I love it

But the move has some observers — including Musk himself — asking: How could this possibly be legal?

Because the nonprofit is there to represent the public, this would effectively mean shifting billions away from people like you and me. As some are noting, it feels a lot like theft.

it continues to be astounding how gullible some people can be (/choose to stay?)

a nsfw found in the wild

Found a good one in the wild

Didn’t you know LLM stood for Limited Liability Machine

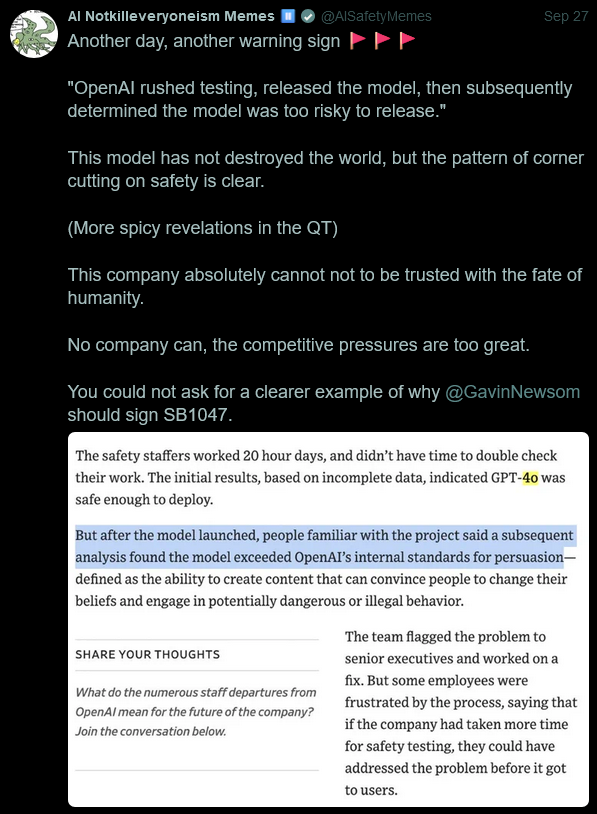

I vaguely remember mentioning this AI doomer before, but I ended up seeing him openly stating his support for SB 1047 whilst quote-tweeting a guy talking about OpenAI’s current shitshow:

I’ve had this take multiple times before, but now I feel pretty convinced the “AI doom/AI safety” criti-hype is going to end up being a major double-edged sword for the AI industry.

The industry’s publicly and repeatedly hyped up this idea that they’re developing something so advanced/so intelligent that it could potentially cause humanity to get turned into paperclips if something went wrong. Whilst they’ve succeeded in getting a lot of people to buy this idea, they’re now facing the problem that people don’t trust them to use their supposedly world-ending tech responsibly.

Isn’t the primary reason people are so powerfully persuaded by this technology that they’re constantly told that if they don’t use its answers, they will have their life’s work and dignity taken from them? Like, how many are in the control group where they persuade people with a gun to their head?

Was salivating all weekend waiting for this to drop, from Subbarao Kambhampati’s group:

Ladies and gentlemen, we have achieved block stacking abilities. It is a straight shot from here to cold fusion! … unfortunately, there is a minor caveat:

Looks like performance drops like a rock as number of steps required increases…

correct me if I’m reading this wrong — the results are that LLMs are much, much worse than classical AI at planning block placement for SHRDLU? that seems pretty damning

Yes, the classical algo achieves perfect accuracy and is way faster. There is also a table that shows the cost of running o1 is enormous. Like comically bad. Boil a small ocean bad. We’ll just 10x the size and it will achieve 15 steps inshallah.

Imo, this is the same behavior we see on math problems. The more steps it takes, the higher the chance it just decoheres completely. I can’t see any reason why this type of thing would just “click” for the models if they are also unable to do multiplication.

I mean this just reeks of pure hopium from OAI and co that things will magykly work out. (But the newer model is clearly better^{tm}! I still don’t see any indication that one day that chart is just going to be 100s across the board.)
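A crude way to see why long plans fall apart: a toy model, assuming (purely for illustration, not something from the paper) that each step succeeds independently with the same probability p and any single failure sinks the whole plan, so the chance of getting all n steps right is p^n. The function name and the per-step accuracies below are hypothetical, not results from Kambhampati’s group.

```python
# Toy model (assumption): every step of a plan succeeds independently with
# the same probability p, and one failed step ruins the whole plan.
def plan_success_rate(p: float, steps: int) -> float:
    """Probability that all `steps` steps succeed, i.e. p ** steps."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability in [0, 1]")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return p ** steps


if __name__ == "__main__":
    # Hypothetical per-step accuracies, NOT figures from the paper.
    for p in (0.99, 0.95, 0.90):
        rates = [round(plan_success_rate(p, n), 3) for n in (1, 5, 10, 15, 20)]
        print(f"p={p}: {rates}")
```

Even at a hypothetical 95% per step, this toy model gives worse than coin-flip odds by 20 steps, which is the general shape of the “drops like a rock” curve. Real model errors aren’t independent, so treat it as intuition, not a fit to the data.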

Large Reasoning Models

May the coiners of this jargon step on Lego until the end of days

Somehow I managed to mention the wordpress lawsuit on last week’s thread instead of this one, so let’s try again.

Matt Mullenweg, the wordpress(.)com guy and current owner of Tumblr, tried to shake down competing blog product WP Engine (which builds on the same open-source software that his company does) for 8% of their revenue (https://goblin.band/notes/9yjrc2logimd1tr3, h/t to froztbyte, who was also on the old thread for some mysterious reason), or else he’d say mean things about them at a conference where they were one of the sponsors. They didn’t pay up, so he compared them to cancer.

And now they’re suing him.

https://notes.ghed.in/posts/2024/matt-mullenweg-wp-engine-debacle/

Mullenweg’s the same guy who publicly harassed a random trans woman on Tumblr and had a general meltdown to the point where Tumblr staff had to distance themselves from him, so I’m not shocked. (EDIT: Somehow used the singular masc pronoun for the entirety of Tumblr staff - don’t ask me how)

(That it’s Tumblr is the only thing that shocks me - you’d think he’d have realised its queer-friendly rep was one of the main things going for it)

A Lobsters user states the following regarding LLMs being used in medical diagnoses:

If you have very unusual symptoms, for example, there’s a higher chance that the LLM will determine that they are outside of the probability space allowed and replace them with something more common.

Another one opines:

Don’t humans and in particular doctors do precisely that? This may be anecdotal, but I know countless stories of people being misdiagnosed because doctors just assumed the cause to be the most common thing they diagnose. It is not obvious to me that LLMs exhibit this particular misjudgement more than humans. In fact, it is likely that LLMs know rare diseases and symptoms much better than human doctors. LLMs also have way more time to listen and think.

<Babbage about UK parliamentarians.gif>

Also please fill in the obligatory rant about how LLMs don’t actually know any diseases or symptoms. Like, if your training data was collected before 2020 you wouldn’t have a single COVID case, but if you started collecting in 2020 you’d have a system that spat out COVID to a disproportionately large fraction of respiratory symptoms (and probably several tummy aches and broken arms too, just for good measure).

nothing hits worse than an able-bodied techbro imagining what medical care must be like for someone who needs it. here, let me save you from the possibility of misdiagnosis by building and mandating the use of the misdiagnosis machine

ya know that inane SITUATIONAL AWARENESS paper that the ex-OpenAI guy posted, which is basically a window into what the most fully committed OpenAI doom cultists actually believe?

yeah, Ivanka Trump just tweeted it

But right now, there are perhaps a few hundred people, most of them in San Francisco and the AI labs, that have situational awareness. Through whatever peculiar forces of fate, I have found myself amongst them.

oh boy

barron: shows ivanka the minecraft speedrun

ivanka: I HAVE SEEN THE LIGHT, BROTHER

edit: accidentally read ivanka as melania, now corrected

Personally, I was radicalized by ‘watch for rolling rocks’ in .5 A presses

parallel universes were the inspiration behind urbit, it all makes sense

Inventor sez “I locked myself in my apartment for 4 years to build this humanoid”. Surprisingly, not a sexbot!

Surprisingly, not a sexbot!

Well, not with that attitude

not a sexbot!

Skill issue.

You know that’s for Gen 2.0

This tech curve is about to go exponential, if you know what I’m sayin’

–venture capitalists, probably

all hail the hockey stick; may we forever outspend all competition and reap the rewards of a ravaged market we solely control