But will it show Elon’s baby weenie?

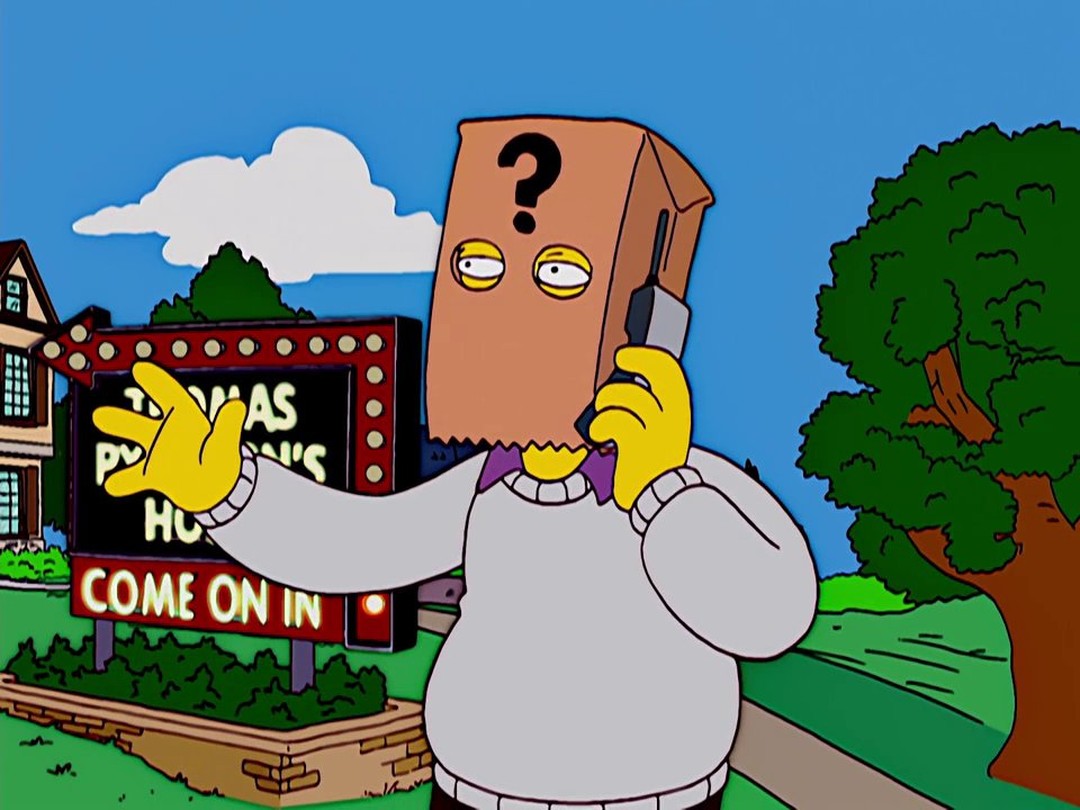

Why is the headline putting the blame on an inanimate program? If those X posters had used Photoshop to do this, the headline would not be “Photoshop edited Good’s body…”

Controlling the bot with a natural-language interface does not mean the bot has agency.

I don’t know the specifics of this reported case, and I’m not interested in learning them, but I know part of the controversy when the Grok deepfake thing first became a big story was that Grok was starting to add risqué elements to prompted pictures even when the prompt didn’t ask for them. But yeah, if users are giving shitty prompts (and I’m sure too many are), they are as much at fault as Grok’s devs/designers, who did not put in safeguards to keep those prompts from being actionable before releasing it to the public.

PREDATORS used Grok to deepfake…

A predator put out Grok.

Sure, and that’s bad too.

But Elon isn’t making those pervs do pervy things. He’s just making it easier.

Guns don’t kill people, etc etc…

Right, because they pull their own triggers, etc., etc…

Edit: To clarify, and to make absolutely certain you can’t possibly think I’m agreeing with you: I’m not complaining about Grok (although chatbot “AI” are stupid fucking tools for tools); I’m complaining about the PREDATORS who use chatbots to do sexual deviancy shit. Not unlike the ammosexuals/ICE agents who fantasize about using their ARs to kill women.

I guess I misinterpreted your comment. I thought you were trying to imply that all of the responsibility rested on the badly-behaving users and that the makers of Grok had no responsibility to prevent sexual harassment and CSAM on their platform.

The makers of Grok are responsible for all of its sins, yes.

But mostly I was referring to the men who use software like this for those purposes.

But this is nothing new. I’m old enough to remember people doing things like this before anyone was even using Photoshop for it.

No, the problem is always perverted men.

And for the incels, no, women don’t do shit like that.

“We take action against illegal content on X, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary,” X’s “Safety” account claimed that same day.

It really sucks they can make users ultimately responsible.

And yet they leave unfettered access to the tool that makes it possible for predators to do such vile shit.

In terms of how this is reported, at what point does this become Streisanding by proxy? I think anything from the Melon deserves to be scrutinized and called out for missteps and mistakes. At this point, I personally don’t mind if the media is overly critical about any of that because of his behavior. And what I’m reading about Grok is terrible and makes me glad I left Twitter after he bought it. At the same time, these “put X in a bikini” headlines must be drawing creeps towards Grok in droves. It’s ideal marketing to get them interested. Maybe there isn’t a way to shine the necessary light on this that doesn’t also attract the moths. I just think in about ten years’ time we will get a lot of “I started undressing people against their will on Grok and then got hooked” defenses in courtrooms. And I wonder if there would’ve been a way to report on it without causing more harm at the same time.