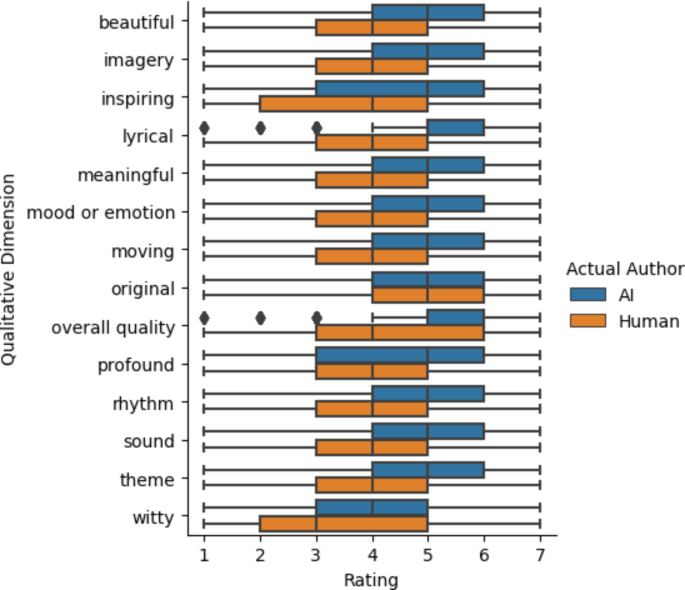

As AI-generated text continues to evolve, distinguishing it from human-authored content has become increasingly difficult. This study examined whether non-expert readers could reliably differentiate between AI-generated poems and those written by well-known human poets. We conducted two experiments with non-expert poetry readers and found that participants performed below chance levels in identifying AI-generated poems (46.6% accuracy, χ2(1, N = 16,340) = 75.13, p < 0.0001). Notably, participants were more likely to judge AI-generated poems as human-authored than actual human-authored poems (χ2(2, N = 16,340) = 247.04, p < 0.0001). We found that AI-generated poems were rated more favorably in qualities such as rhythm and beauty, and that this contributed to their mistaken identification as human-authored. Our findings suggest that participants employed shared yet flawed heuristics to differentiate AI from human poetry: the simplicity of AI-generated poems may be easier for non-experts to understand, leading them to prefer AI-generated poetry and misinterpret the complexity of human poems as incoherence generated by AI.

If you have to be in the club to see it, I can’t help but wonder if it’s not there.

Like, it could be that I just don’t get it, but until I see actual blind tests it’s suspicious.