I think AI is neat.

They’re kind of right. LLMs are not general intelligence and there’s not much evidence to suggest that LLMs will lead to general intelligence. A lot of the hype around AI is manufactured by VCs and companies that stand to make a lot of money off of the AI branding/hype.

Yeah, this sounds about right. What was OP implying? I’m a bit lost.

I believe they were implying that a lot of the people who say “it’s not real AI it’s just an LLM” are simply parroting what they’ve heard.

Which is a fair point, because AI has never meant “general AI”; it’s an umbrella term for a wide variety of intelligence-like tasks as performed by computers.

Autocorrect on your phone is a type of AI, because it compares the words you type against a database of known words via a “typo distance”, and adds new words to its database when you overrule it so it doesn’t make the same mistake.

It’s like saying a motorcycle isn’t a real vehicle because a real vehicle has two wings, a roof, and flies through the air filled with hundreds of people.
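A rough sketch of the autocorrect idea above, assuming plain Levenshtein edit distance as the “typo distance” (real phone keyboards likely also weight things like key adjacency, which this ignores). The `Autocorrect` class, its method names, and the cutoff of 2 edits are made up for illustration.

```python
def typo_distance(a: str, b: str) -> int:
    """Levenshtein edit distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete a character from a
                            curr[j - 1] + 1,      # insert a character into a
                            prev[j - 1] + cost))  # substitute (or keep) a character
        prev = curr
    return prev[-1]


class Autocorrect:
    """Hypothetical toy autocorrect: a word set plus a distance cutoff."""

    def __init__(self, known_words, max_distance=2):
        self.known_words = set(known_words)
        self.max_distance = max_distance  # assumed cutoff, not a real keyboard's value

    def suggest(self, typed: str) -> str:
        if typed in self.known_words:
            return typed
        # Sort first so ties are broken the same way on every run.
        best = min(sorted(self.known_words),
                   key=lambda w: typo_distance(typed, w),
                   default=None)
        if best is not None and typo_distance(typed, best) <= self.max_distance:
            return best
        return typed  # nothing close enough, leave the word alone

    def overrule(self, typed: str) -> None:
        """The user rejected the correction, so remember their word."""
        self.known_words.add(typed)


ac = Autocorrect(["hello", "world", "phone"])
print(ac.suggest("helo"))    # hello
ac.overrule("lemmy")
print(ac.suggest("lemmmy"))  # lemmy
```

No learning in the neural-network sense happens here: the “database” is just a set that grows when you overrule it.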

I’ve often seen people on Lemmy confidently state that current “AI” thinks and learns exactly like humans and that LLMs work exactly like human brains, etc.

Are you sure this wasn’t just people stating that when it comes to training on art there is no functional difference in the sense that both humans and AI need to see art to make it?

Weird, I don’t think I’ve ever seen that even remotely claimed.

Closest I think I’ve come is the argument that legally, AI learning systems are similar to how humans learn, namely storing information about information.

It’s usually some rant about “brains are just probability machines as well” or “every artist learns from thousands of pictures of other artists, just as image generator xy does”.

Which is a fair point, because AI has never meant “general AI”; it’s an umbrella term for a wide variety of intelligence-like tasks as performed by computers.

Do you mean in the everyday sense or the academic sense? I think this is why there’s such grumbling around the topic. Academically speaking that may be correct, but I think for the general public, AI has been more muddled and presented in a much more robust, general AI way, especially in fiction. Look at any number of scifi movies featuring forms of AI, whether it’s the movie literally named AI or Terminator or Blade Runner or more recently Ex Machina.

Each of these technically may be presenting general AI, but for the public, it’s just AI. In a weird way, this discussion is sort of an inversion of what one usually sees between academics and the public. Generally academics are trying to get the public not to use technical terms loosely, yet here some of the public is trying to get some of the tech/academic sphere to not, at least as they think, use technical terms loosely.

Arguably it’s from a misunderstanding, but if anyone should understand the dynamics of language, you’d hope it would be those trying to calibrate machines to process language.

Well, that’s the issue at the heart of it I think.

How much should we cater our choice of words to those who know the least?

I’m not an academic, and I don’t work with AI, but I do work with computers and I know the distinction between AI and general AI.

I have a little irritation at the theme, given I work in the security industry and it’s now difficult to use the more common abbreviation for cryptography without getting Bitcoin mixed up in everything.

All that aside, the point is that people talking about how it’s not “real AI” often come across as people who don’t know what they’re talking about, which was the point of the image.

All that aside, the point is that people talking about how it’s not “real AI” often come across as people who don’t know what they’re talking about, which was the point of the image.

The funny part is, as I mention in my comment, isn’t that how both parties to these conversations feel? The problem is they’re talking past each other, but the worst part is, arguably the more educated participant should be more apt to recognize this and clarify or better yet, ask for clarification so they can see where the disconnect is emerging to improve communication.

Also, let’s remember that it’s not the laypeople describing the technology in general personified terms like “learning” or “hallucinating”, which furthers some of the grumbling.

Well, I don’t generally expect an academic level of discourse out of image macros found on the Internet.

Usually when I see people talking about it, I do see people making clarifying comments and asking questions like you describe. Sorta like when I described how AI is an umbrella term.

I’m not sure I’d say that learning and hallucinating are personified terms. We see both of those out of any organism complex enough to have something that works like a nervous system, for example.

I believe OP is attempting to take on an army of straw men in the form of a poorly chosen meme template.

No, people say this constantly; it’s not just a strawman.

I think OP implied that AI is neat.

I guess that no matter what they are or what you call them they still can be useful

Pretty sure the meme format is for something you get extremely worked up about and want to passionately tell someone, even in inappropriate moments, but no one really gives a fuck

People who don’t understand or use AI think it’s less capable than it is and claim it’s not AGI (which no one else was saying anyways) and try to make it seem like it’s less valuable because it’s “just using datasets to extrapolate, it doesn’t actually think.”

Guess what you’re doing right now when you “think” about something? That’s right, you’re calling up the thousands of experiences that make up your “training data” and using it to extrapolate on what actions you should take based on said data.

You know how to parallel park because you’ve assimilated road laws, your muscle memory, and the knowledge of your car’s wheelbase into a single action. AI just doesn’t have sapience and therefore cannot act without input, but the process it does things with is functionally similar to how we make decisions. The difference is that the training data gets input within seconds as opposed to being built over a lifetime.

People who aren’t programmers, haven’t studied computer science, and don’t understand LLMs are much more impressed by LLMs.

That’s true of any technology. As someone who is a programmer, has studied computer science, and does understand LLMs, this represents a massive leap in capability. Is it AGI? No. Is it a potential paradigm shift? Yes. This isn’t pure hype like Crypto was, there is a core of utility here.

Crypto was never pure hype either. Decentralized currency is an important thing to have, it’s just shitty it turned into some investment speculative asset rather than a way to buy drugs online without the glowies looking

Crypto solves a few theoretical problems and creates a few real ones

Yeah I studied CS and work in IT Ops, I’m not claiming this shit is Cortana from Halo, but it’s also not NFTs. If you can’t see the value you haven’t used it for anything serious, cause it’s taking jobs left and right.

In my experience it’s the opposite, but the emotional reaction isn’t so much being impressed as being afraid and claiming it’s just all plagiarism

If you’ve ever actually used any of these algorithms it becomes painfully obvious they do not “think”. Give it a task slightly more complex/nuanced than what it has been trained on and you will see it draw false conclusions that would be obviously wrong had any thought actually taken place. Generalization is not something they do, which is a fundamental part of human problem solving.

Make no mistake: they are text predictors.

Depends on what you mean by general intelligence. I’ve seen a lot of people confuse Artificial General Intelligence and AI more broadly. Even something as simple as the K-nearest neighbor algorithm is artificial intelligence, as this is a much broader topic than AGI.
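To show just how simple K-nearest neighbor is, here is a minimal sketch, assuming 2D points, Euclidean distance, and a plain majority vote. The function name `knn_predict` and the toy data are made up for illustration.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    train: list of (point, label) pairs, where point is a tuple of numbers.
    """
    # Sort all training examples by distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy data: two well-separated clusters.
train = [
    ((0, 0), "a"), ((0, 1), "a"), ((1, 0), "a"),
    ((5, 5), "b"), ((5, 6), "b"), ((6, 5), "b"),
]
print(knn_predict(train, (0.5, 0.5)))  # a
print(knn_predict(train, (5.5, 5.5)))  # b
```

There is no training step at all: the “model” is the stored data plus a distance function, and it still counts as AI in the broad sense.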

Wikipedia gives two definitions of AGI:

An artificial general intelligence (AGI) is a hypothetical type of intelligent agent which, if realized, could learn to accomplish any intellectual task that human beings or animals can perform. Alternatively, AGI has been defined as an autonomous system that surpasses human capabilities in the majority of economically valuable tasks.

If some task can be represented through text, an LLM can, in theory, be trained to perform it either through fine-tuning or few-shot learning. The question then is how general do LLMs have to be for one to consider them to be AGIs, and there’s no hard metric for that question.

I can’t pass the bar exam like GPT-4 did, and it also has a lot more general knowledge than me. Sure, it gets stuff wrong, but so do humans. We can interact with physical objects in ways that GPT-4 can’t, but it is catching up. Plus Stephen Hawking couldn’t move the same way that most people can either and we certainly wouldn’t say that he didn’t have general intelligence.

I’m rambling but I think you get the point. There’s no clear threshold or way to calculate how “general” an AI has to be before we consider it an AGI, which is why some people argue that the best LLMs are already examples of general intelligence.

Depends on what you mean by general intelligence. I’ve seen a lot of people confuse Artificial General Intelligence and AI more broadly. Even something as simple as the K-nearest neighbor algorithm is artificial intelligence, as this is a much broader topic than AGI.

Well, I mean the ability to solve problems we don’t already have the solution to. Can it cure cancer? Can it solve the p vs np problem?

And by the way, Wikipedia tags that second definition as dubious, as that is the definition put forth by OpenAI, who again, has a financial incentive to make us believe LLMs will lead to AGI.

Not only has it not been proven whether LLMs will lead to AGI, it hasn’t even been proven that AGIs are possible.

If some task can be represented through text, an LLM can, in theory, be trained to perform it either through fine-tuning or few-shot learning.

No it can’t. If the task requires the LLM to solve a problem that hasn’t been solved before, it will fail.

I can’t pass the bar exam like GPT-4 did

Exams often are bad measures of intelligence. They typically measure your ability to consume, retain, and recall facts. LLMs are very good at that.

Ask an LLM to solve a problem without a known solution and it will fail.

We can interact with physical objects in ways that GPT-4 can’t, but it is catching up. Plus Stephen Hawking couldn’t move the same way that most people can either and we certainly wouldn’t say that he didn’t have general intelligence.

The ability to interact with physical objects is very clearly not a good test for general intelligence and I never claimed otherwise.

I know the second definition was proposed by OpenAI, who obviously has a vested interest in this topic, but that doesn’t mean it can’t be a useful or informative conceptualization of AGI, after all we have to set some threshold for the amount of intelligence AI needs to display and in what areas for it to be considered an AGI. Their proposal of an autonomous system that surpasses humans in economically valuable tasks is fairly reasonable, though it’s still pretty vague and very much debatable, which is why this isn’t the only definition that’s been proposed.

Your definition is definitely more peculiar as I’ve never seen anyone else propose something like it, and it also seems to exclude humans since you’re referring to problems we can’t solve.

The next question then is what problems specifically AI would need to solve to fit your definition, and with what accuracy. Do you mean solve any problem we can throw at it? At that point we’d be going past AGI and now we’re talking about artificial superintelligence…

Not only has it not been proven whether LLMs will lead to AGI, it hasn’t even been proven that AGIs are possible.

By your definition AGI doesn’t really seem possible at all. But of course, your definition isn’t how most data scientists or people in general conceptualize AGI, which is the point of my comment. It’s very difficult to put a clear-cut line on what AGI is or isn’t, which is why there are those like you who believe it will never be possible, but there are also those who argue it’s already here.

No it can’t. If the task requires the LLM to solve a problem that hasn’t been solved before, it will fail.

Ask an LLM to solve a problem without a known solution and it will fail.

That’s simply not true. That’s the whole point of the concept of generalization in AI and what the few-shot and zero-shot metrics represent - LLMs solving problems represented in text with few or no prior examples by reasoning beyond what they saw in the training data. You can actually test this yourself by simply signing up to use ChatGPT since it’s free.

Exams often are bad measures of intelligence. They typically measure your ability to consume, retain, and recall facts. LLMs are very good at that.

So are humans. We’re also deterministic machines that output some action depending on the inputs we get through our senses, much like an LLM outputs some text depending on the inputs it received, plus as I mentioned they can reason beyond what they’ve seen in the training data.

The ability to interact with physical objects is very clearly not a good test for general intelligence and I never claimed otherwise.

I wasn’t accusing you of anything, I was just pointing out that there are many things we can argue require some degree of intelligence, even physical tasks. The example in the video requires understanding the instructions, the environment, and how to move the robotic arm in order to complete new instructions.

I find LLMs and AGI interesting subjects and was hoping to have a conversation on the nuances of these topics, but it’s pretty clear that you just want to turn this into some sort of debate to “debunk” AGI, so I’ll be taking my leave.

IME when you prompt an LLM to solve a new problem it usually just makes up a bunch of complete bullshit that sounds good but doesn’t mean anything.

I agree, there is no formal definition for AGI, so it’s a bit silly to discuss that really. Funnily enough I inadvertently wrote the nearest neighbour algorithm to model swarming behaviour back when I was an undergrad and didn’t even consider it rudimentary AI.

Can I ask what your take on the possibility of neural networks understanding what they are doing is?

Yes refreshing to see someone a little literate here thanks for fighting the misinformation man

Can your calculator only serve problems you already solved? I really don’t buy that take

LLMs are in fact not at all good at retaining facts; it’s one of the most worked-on problems for them

LLMs can solve novel problems. It’s actually much more complex than just a lookup robot, which we already have for such tasks

You just take wild guesstimates on how they work and it just feels wrong to me to not point that out

It depends a lot on how we perceive “intelligence”. It’s a lot more vague of a term than most, so people have very different views of it. Some people might have the idea of it meaning the response to stimuli & the output (language or art or any other form) being indistinguishable from humans. But many people may also agree that whales/dolphins have the same level of, or superior, “intelligence” to humans. The term is too vague to really prescribe with confidence, and more importantly people often use it to mean many completely different concepts (“intelligence” as a measurable/quantifiable property of either how quickly/efficiently a being can learn or use knowledge or more vaguely its “capacity to reason”, “intelligence” as the idea of “consciousness” in general, “intelligence” to refer to amount of knowledge/experience one currently has or can memorize, etc.)

In computer science “artificial intelligence” has always simply referred to a program making decisions based on input. There was never any bar to reach for how “complex” it had to be to be considered AI. That’s why minecraft zombies or shitty FPS bots are “AI”, or a simple algorithm made to beat table games are “AI”, even though clearly they’re not all that smart and don’t even “learn”.
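In that sense, a game bot’s “AI” can be a handful of if-statements. A minimal sketch, assuming a 2D world and made-up ranges (these are not Minecraft’s actual values, and `zombie_action` is a hypothetical name):

```python
import math


def zombie_action(zombie_pos, player_pos, sight_range=16.0, attack_range=1.5):
    """Pick an action purely from the current input; nothing is learned or remembered."""
    distance = math.hypot(player_pos[0] - zombie_pos[0],
                          player_pos[1] - zombie_pos[1])
    if distance <= attack_range:
        return "attack"
    if distance <= sight_range:
        return "chase"
    return "wander"


print(zombie_action((0, 0), (1, 0)))    # attack
print(zombie_action((0, 0), (10, 0)))   # chase
print(zombie_action((0, 0), (100, 0)))  # wander
```

It makes decisions based on input, which is all the term has required, even though it is clearly not smart and does not learn.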

Even sentience is on a scale. Even cows or dogs or parrots or crows are sentient, but not as much as we are. Computers are not sentient yet, but one day they will be. And then soon after they will be more sentient than us. They’ll be able to see their own brains working, analyze their own thoughts and emotions(?) in real time and be able to achieve a level of self reflection and navel gazing undreamed of by human minds! :D

The damn Viet Cong 😒

Only 2 people on the server left alive, knife fight in the center

OP didn’t say general intelligence. LLMs mimic what actually intelligent beings do, AKA artificial intelligence.

Claiming AGI is the only “real” AI is like claiming Swiss army knives are the only “real” knives. It’s just silly.

But also, the number of people who seem to think we need a magic soul to perform useful work is way, way too high.

The main problem is Idiots seem to have watched one too many movies about robots with souls and gotten confused between real life and fantasy - especially shitty journalists way out their depth.

This big gotcha ‘they don’t live up to the hype’ is 100% people who heard ‘ai’ and thought of bad Will Smith movies. LLMs absolutely live up to the actual sensible things people hoped for and have exceeded those expectations. They’re also incredibly good at a huge range of very useful tasks which have traditionally been considered as requiring intelligence, but they’re not magically able to do everything. Of course they’re not; that’s not how anyone actually involved said they would work or expected them to work.

No idea why you’re downvoted. This is correct.

Yes. But the more advanced LLMs get, the less it matters in my opinion. I mean if you have two boxes, one of which is actually intelligent and the other is “just” a very advanced parrot - it doesn’t matter, given they produce the same output. I’m sure that already LLMs can surpass some humans, at least at certain disciplines. In a couple of years the difference between a parrot-box and something actually intelligent will only show at the very fringes of massively complicated tasks. And that is way beyond the capability threshold that allows someone to do nasty stuff with it, to shed a dystopian light on it.

I mean if you have two boxes, one of which is actually intelligent and the other is “just” a very advanced parrot - it doesn’t matter, given they produce the same output.

You’re making a huge assumption; that an advanced parrot produces the same output as something with general intelligence. And I reject that assumption. Something with general intelligence can produce something novel. An advanced parrot can only repeat things it’s already heard.

How do you define novel? Because LLMs absolutely have produced novel data.

LLMs can’t produce anything without being prompted by a human. There’s nothing intelligent about them. Imo it’s an abuse of the word intelligence since they have exactly zero autonomy.

I use LLMs to create things no human has likely ever said and it’s great at it, for example

‘while juggling chainsaws atop a unicycle made of marshmallows, I pondered the existential implications of the colour blue on a pineapples dream of becoming a unicorn’

When I ask it to do the same using neologisms the output is even better, one of the words was exquimodal which I then asked for it to invent an etymology and it came up with one that combined excuistus and modial to define it as something beyond traditional measures which fits perfectly into the sentence it created.

You can’t ask a parrot to invent words with meaning and use them in context, that’s a step beyond repetition - of course it’s not full dynamic self aware reasoning but it’s certainly not being a parrot

Producing word salad really isn’t that impressive. At least the art LLMs are somewhat impressive.

If you ask it to make up nonsense and it does it then you can’t get angry lol. I normally use it to help analyse code or write sections of code, sometimes to teach me how certain functions or principles work - it’s incredibly good at that, I do need to verify it’s doing the right thing but I do that with my code too and I’m not always right either.

As a research tool it’s great at taking a basic dumb description and pointing me to the right things to look for, especially for things with a lot of technical terms and obscure areas.

And yes they can occasionally make mistakes or invent things but if you ask properly and verify what you’re told then it’s pretty reliable, far more so than a lot of humans I know.

The difference is that you can throw enough bad info at it that it will start parroting that instead of factual information, because it doesn’t have the ability to criticize the information it receives, whereas a human can be told that the sky is purple with orange dots a thousand times a day and will always point at the sky and tell you “No.”

To make the analogy actually comparable the human in question would need to be learning about it for the first time (which is analogous to the training data) and in that case you absolutely could convince the small child of that. Not only would they believe it if told enough times by an authority figure, you could convince them that the colors we see are different as well, or something along the lines of giving them bad data.

A fully trained AI will tell you that you’re wrong if you told it the sky was orange, it’s not going to just believe you and start claiming it to everyone else it interacts with. It’s been trained to know the sky is blue and won’t deviate from that outside of having its training data modified. Which is like brainwashing an adult human, in which case yeah you absolutely could have them convinced the sky is orange. We’ve got plenty of information on gaslighting, high control group and POW psychology to back that up too.

Feed LLMs all new data that’s false and it will regurgitate it as being true even if it had previously been fed information that contradicts it, it doesn’t make the difference between the two because there’s no actual analysis of what’s presented. Heck, even without intentionally feeding them false info, LLMs keep inventing fake information.

Feed an adult new data that’s false and it’s able to analyse it and make deductions based on what they know already.

We don’t compare it to a child or to someone that was brainwashed because it makes no sense to do so and it’s completely disingenuous. “Compare it to the worst so it has a chance to win!” Hell no, we need to compare it to the people that are references in their field because people will now be using LLMs as a reference!

Ha ha yeah humans sure are great at not being convinced by the opinions of other people, that’s why religion and politics are so simple and society is so sane and reasonable.

Helen Keller would believe you it’s purple.

If humans didn’t have eyes they wouldn’t know the colour of the sky, if you give an ai a colour video feed of outside then it’ll be able to tell you exactly what colour the sky is using a whole range of very accurate metrics.

This is one of the worst rebuttals I’ve seen today because you aren’t addressing the fact that the LLM has zero awareness of anything. It’s not an intelligence and never will be without additional technologies built on top of it.

Why would I rebut that? I’m simply arguing that they don’t need to be ‘intelligent’ to accurately determine the colour of the sky and that if you expect an intelligence to know the colour of the sky without ever seeing it then you’re being absurd.

The way the comment I responded to was written makes no sense to reality and I addressed that.

Again, as I said in other comments, you’re arguing that an LLM is not Will Smith in I, Robot or Scarlett Johansson playing the role of a USB stick, but that’s not what anyone sane is suggesting.

A fork isn’t great for eating soup, neither is a knife required but that doesn’t mean they’re not incredibly useful eating utensils.

Try thinking of an LLM as a type of NLP or natural language processing tool which allows computers to use normal human text as input to perform a range of tasks. It’s hugely useful and unlocks a vast amount of potential but it’s not going to slap anyone for joking about its wife.

How come all LLMs keep inventing facts and telling false information then?

People do that too, actually we do it a lot more than we realise. Studies of memory for example have shown we create details that we expect to be there to fill in blanks and that we convince ourselves we remember them even when presented with evidence that refutes it.

A lot of the newer implementations use more complex methods of fact verification, it’s not easy to explain but essentially it comes down to the weight you give different layers. GPT 5 is already training and likely to be out around October but even before that we’re seeing pipelines using LLM to code task based processes - an LLM is bad at chess but could easily install stockfish in a VM and beat you every time.

I find this line of thinking tedious.

Even if LLMs can’t be said to have ‘true understanding’ (however you’re choosing to define it), there is very little to suggest they should be able to ~~understand~~ predict the correct response to a particular context, abstract meaning, and intent with what primitive tools they were built with.

If there’s some as-yet uncrossed threshold to a bare-minimum ‘understanding’, it’s because we simply don’t have the language to describe what that threshold is or know when it has been crossed. If the assumption is that ‘understanding’ cannot be a quality granted to a transformer-based model - or even a quality granted to computers generally - then we need some other word to describe what LLMs are doing, because ‘predicting the next-best word’ is an insufficient description for what would otherwise be a sleight-of-hand trick.

There’s no doubt that there’s a lot of exaggerated hype around these models and LLM companies, but some of these advancements published in 2022 surprised a lot of people in the field, and their significance shouldn’t be slept on.

Certainly don’t trust the billion-dollar companies hawking their wares, but don’t ignore the technology they’re building, either.

You are best off thinking of LLMs as highly advanced auto correct. They don’t know what words mean. When they output a response to your question the only process that occurred was “which words are most likely to come next”.

And we all know how often auto correct is wrong

Yep. Been having trouble with mine recently, it’s managed to learn my typos and it’s getting quite frustrating

That’s only true on a very basic level. I understand that Turing’s maths is complex and unintuitive, even more so than calculus, but it’s a very established fact that relatively simple mathematical operations can have emergent properties when they interact, producing far more complexity than initially expected.

The same way the giraffe gets its spots the same way all the hardware of our brain is built, a strand of code is converted into physical structures that interact and result in more complex behaviours - the actual reality is just math, and that math is almost entirely just probability when you get down to it. We’re all just next word guessing machines.

We don’t guess words like a Markov chain; instead we use a rather complex token system in our brain which then gets converted to words. LLMs do this too - that’s how they can learn about a subject in one language then explain it in another.
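For reference, a bare Markov-chain word guesser, the thing being contrasted with LLMs here, fits in a few lines. This is a toy sketch: the corpus and function names are made up, it only ever looks one word back, and a real LLM is a neural network over tokens rather than a table of counts.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]


def generate(counts, start, length=5):
    """Greedily chain most-likely-next guesses, starting from `start`."""
    out = [start]
    for _ in range(length - 1):
        nxt = most_likely_next(counts, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


counts = train_bigrams("the sky is blue the sky is clear the sky is blue")
print(most_likely_next(counts, "is"))  # blue
print(generate(counts, "the", 4))      # the sky is blue
```

Because the only context is the previous word, it cannot carry a topic, a structure, or a language switch across a sentence, which is the gap this comment is pointing at.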

Calling an LLM predictive text is a fundamental misunderstanding of reality, it’s somewhat true on a technical level but only when you understand that predicting the next word can be a hugely complex operation which is the fundamental math behind all human thought also.

Plus they’re not really just predicting one word ahead anymore, they do structured generation much like how image generators do - first they get the higher level principles to a valid state then propagate down into structure and form before making word and grammar choices. You can manually change values in the different layers and see the output change, exploring the latent space like this makes it clear that it’s not simply guessing the next word but guessing the next word which will best fit into a required structure to express a desired point - I don’t know how other people are coming up with sentences but that feels a lot like what I do

LLMs don’t “learn”; they literally don’t have the capacity to “learn”. We train them on an insane amount of text and then the LLM’s job is to produce output that looks like that text. That’s why when you attempt to correct it nothing happens. It can’t learn, it doesn’t have the capacity to.

Humans aren’t “word guessing machines”. Humans produce language with intent and meaning. This is why you and I can communicate. We use language to represent things. When I say “Tree” you know what that is because it’s the word we use to describe an object we all know about. LLMs don’t know what a tree is. They can use “tree” in a sentence correctly but they don’t know what it means. They can even translate it to another language but they still don’t know what “tree” means. What they know is generating text that looks like what they were trained on.

Here’s a well made video by Kyle Hill that will teach you a lot better than I could

Even if LLMs can’t be said to have ‘true understanding’ (however you’re choosing to define it), there is very little to suggest they should be able to ~~understand~~ predict the correct response to a particular context, abstract meaning, and intent with what primitive tools they were built with.

Did you mean “shouldn’t”? Otherwise I’m very confused by your response

No, i mean ‘should’, as in:

There’s no reason to expect a program that calculates the probability of the next most likely word in a sentence should be able to do anything more than string together an incoherent sentence, let alone correctly answer even an arbitrary question

It’s like using a description for how covalent bonds are formed as an explanation for how it is you know when you need to take a shit.

Fair enough, that just seemed to be the opposite point that the rest of your post was making so seemed like a typo.

I don’t think so…

Knowing that LLMs are just “parroting” is one of the first steps to implementing them in safe, effective ways where they can actually provide value.

LLMs definitely provide value; it’s just debatable whether they’re real AI or not. I believe they’re going to be shoved in a round hole regardless.

I think a better way to view it is that it’s a search engine that works on the word level of granularity. When library indexing systems were invented they allowed us to look up knowledge at the book level. Search engines allowed look ups at the document level. LLMs allow lookups at the word level, meaning all previously transcribed human knowledge can be synthesized into a response. That’s huge, and where it becomes extra huge is that it can also pull on programming knowledge allowing it to meta program and perform complex tasks accurately. You can also hook them up with external APIs so they can do more tasks. What we have is basically a program that can write itself based on the entire corpus of human knowledge, and that will have a tremendous impact.

The next step is to understand much more and not get stuck on the most popular semantic trap

Then you can begin your journey man

There are so, so many llm chains that do way more than parrot. It’s just the last popular catchphrase.

Very tiring to keep explaining that, because even shallow research gets you further than the “it’s a parrot” comment. We are all parrots. It’s extremely irrelevant to the AI safety and usefulness debates.

Most LLM implementations use frameworks to just develop different understandings, and it’s shit, but it’s just not true that they only parrot known things. They have internal worlds, especially when looking at agent networks.

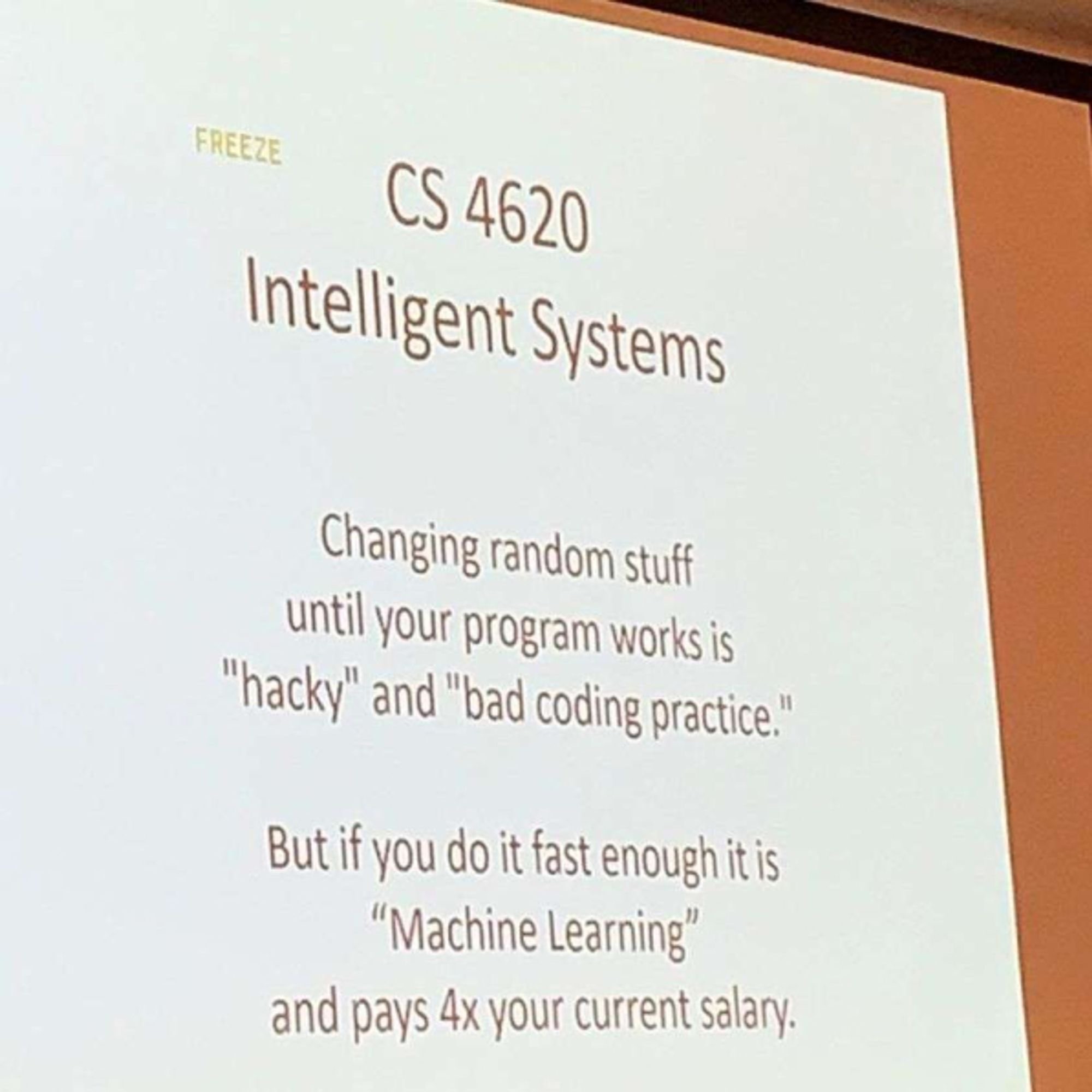

Reminds me of this meme I saw somewhere around here the other week

deleted by creator

Well, college is already dry enough as it is, you gotta appreciate it when your instructor has a sense of humor.

It’s from when we used genetic algorithms

I think LLMs are neat, and Teslas are neat, and HHO generators are neat, and aliens are neat…

…but none of them live up to all of the claims made about them.

HHO generators

…What are these? Something to do with hydrogen? Despite it not making sense for you to write it that way if you meant H2O, I really enjoy the silly idea of a water generator (as in, making water, not running off water).

HHO generators are a car mod that some backyard scientists got into, but didn’t actually work. They involve cracking hydrogen from water, and making explosive gasses some claimed could make your car run faster. There’s lots of YouTube videos of people playing around with them. Kinda dangerous seeming… Still neat.

Thanks! I hadn’t heard of this before, hydrogen fueled cars, sure, but not this. 😄

They’re predicting the next word without any concept of right or wrong, there is no intelligence there. And it shows the second they start hallucinating.

They are a bit like you’d take just the creative writing center of a human brain. So they are like one part of a human mind without sentience or understanding or long term memory. Just the creative part, even though they are mediocre at being creative atm. But it’s shocking because we kind of expected that to be the last part of human minds to be able to be replicated.

Put enough of these “parts” of a human mind together and you might get a proper sentient mind sooner than later.

Exactly. I’m not saying it’s not impressive or even not useful, but one should understand the limitation. For example you can’t reason with an LLM in the sense that you could convince it of your reasoning. It will only respond how most people in the used dataset would have responded (obviously simplified).

You repeat your point but there already was agreement that this is how ai is now.

I fear you may have glossed over the second part where he states that once we simulate other parts of the brain things start to look different very quickly.

There do seem to be 2 kinds of opinions on AI.

- those that look at AI in the present compared to a present day human. This seems to be the majority of people overall

- those that look at AI like a statistic: where it was in the past, what improved it, and project within reason how it will start to look soon enough. This is the majority of people that work in the AI industry.

For me the present day is simply practice for what is yet to come. Because if we don’t nuke ourselves back to the stone age, something, currently undefinable, is coming.

What I fear is AI being used with malicious intent. Corporations that use it for collecting data, for example. Or governments just jailing everyone an AI tells them to.

I’d expect governments to use it to craft public relation strategies. An extension of what they do now by hiring the smartest sociopaths on the planet. Not sure if this would work but I think so. Basically you train an AI on previous messaging and results from polls or voting. And then you train it to suggest strategies to maximize for support for X. A kind of dumbification of the masses. Of course it’s only going to get shittier from there on out.

I didn’t, I just focused on how it is today. I think it can become very big and threatening but also helpful, but that’s just pure speculation at this point :)


…or you might not.

It’s fun to think about but we don’t understand the brain enough to extrapolate AIs in their current form to sentience. Even your mention of “parts” of the mind are not clearly defined.

There are so many potential hidden variables. Sometimes I think people need reminding that the brain is the most complex thing in the universe, we don’t fully understand it yet and neural networks are just loosely based on the structure of neurons, not an exact replica.

True it’s speculation. But before GPT3 I never imagined AI achieving creativity. No idea how you would do it and I would have said it’s a hard problem or like magic, and poof now it’s a reality. A huge leap in quality driven just by quantity of data and computing. Which was shocking that it’s “so simple” at least in this case.

So that should tell us something. We don’t understand the brain but maybe there isn’t much to understand. The biocomputing hardware is relatively clear how it works and it’s all made out of the same stuff. So it stands to reason that the other parts or function of a brain might also be replicated in similar ways.

Or maybe not. Or we might need a completely different way to organize and train other functions of a mind. Or it might take a much larger increase in speed and memory.

You say maybe there’s not much to understand about the brain but I entirely disagree, it’s the most complex object in the known universe and we haven’t discovered all of its secrets yet.

Generating pictures from a vast database of training material is nowhere near comparable.

Ok, again I’m just speculating so I’m not trying to argue. But it’s possible that there are no “mysteries of the brain”, that it’s just irreducible complexity. That it’s just due to the functionality of the synapses and the organization of the number of connections and weights in the brain? Then the brain is like a computer you put a program in. The magic happens with how it’s organized.

And yeah we don’t know how that exactly works for the human brain, but maybe it’s fundamentally unknowable. Maybe there is never going to be a language to describe human consciousness because it’s entirely born out of the complexity of a shit ton of simple things and there is no “rhyme or reason” if you try to understand it. Maybe the closest we get are the models psychology creates.

Then there is fundamentally no difference between painting based on a “vast database of training material” in a human mind and a computer AI. Currently AI-generated imagery is a bit limited in creativity and it’s mediocre but it’s there.

Then it would logically follow that all the other functions of a human brain are similarly “possible” if we train it right and add enough computing power and memory. Without ever knowing the secrets of the human brain. I’d expect the truth somewhere in the middle of those two perspectives.

Another argument in favor of this would be that the human brain evolved through evolution, through random change that was filtered (at least if you do not believe in intelligent design). That means there is no clever organizational structure or something underlying the brain. Just change, test, filter, reproduce. The worst, most complex spaghetti code in the universe. Code written by a moron that can’t be understood. But that means it should also be reproducible by similar means.

Possible, yes. It’s also entirely possible there’s interactions we are yet to discover.

I wouldn’t claim it’s unknowable. Just that there’s little evidence so far to suggest any form of sentience could arise from current machine learning models.

That hypothesis is not verifiable at present as we don’t know the ins and outs of how consciousness arises.

Then it would logically follow that all the other functions of a human brain are similarly “possible” if we train it right and add enough computing power and memory. Without ever knowing the secrets of the human brain. I’d expect the truth somewhere in the middle of those two perspectives.

Lots of things are possible, we use the scientific method to test them not speculative logical arguments.

Functions of the brain

These would need to be defined.

But that means it should also be reproducible by similar means.

Can’t be sure of this… For example, what if quantum interactions are involved in brain activity? How does the grey matter in the brain affect the functioning of neurons? How do the heart/gut affect things? Do cells which aren’t neurons provide any input? Does some aspect of consciousness arise from the very material the brain is made of?

As far as I know all the above are open questions and I’m sure there are many more. But the point is we can’t suggest there is actually rudimentary consciousness in neural networks until we have pinned it down in living things first.

I have a silly little model I made for creating Vogon poetry. One of the models is fed from Shakespeare. The system works by predicting the next letter rather than the next word (and whitespace is just another letter as far as it’s concerned). Here’s one from the Shakespeare generation:

KING RICHARD II:

Exetery in thine eyes spoke of aid.

Burkey, good my lord, good morrow now: my mother’s said

This is silly nonsense, of course, and for its purpose, that’s fine. That being said, as far as I can tell, “Exetery” is not an English word. Not even one of those made-up English words that Shakespeare created all the time. It’s certainly not in the training dataset. However, it does sound like it might be something Shakespeare pulled out of his ass and expected his audience to understand through context, and that’s interesting.
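For anyone curious how a letter-at-a-time generator can invent a word like “Exetery”, here is a minimal sketch. It is not the model described above (that one follows a TensorFlow tutorial and uses a neural network); it swaps in a much simpler character n-gram table. The names `build_model` and `generate` and the tiny `corpus` string are made up for illustration.

```python
import random
from collections import defaultdict

def build_model(text, order=3):
    """Map each `order`-character context to the characters seen right after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context].append(text[i + order])
    return model

def generate(model, seed, length=80, rng=None):
    """Extend `seed` one character at a time by sampling from the table.

    The seed length must equal the order the model was built with.
    Whitespace and newlines are treated like any other character.
    """
    rng = rng or random.Random(0)
    order = len(seed)
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])
        if not choices:  # context never seen in training: stop early
            break
        out += rng.choice(choices)
    return out

# Placeholder text, NOT the real Shakespeare dataset.
corpus = (
    "KING RICHARD II:\n"
    "Good morrow, good my lord. My mother said the king spoke of aid.\n"
    "Exeter, in thine eyes I see the very heart of sorrow.\n"
)

model = build_model(corpus, order=3)
sample = generate(model, seed="Goo", length=80)
print(sample)
```

Because the table only knows which letter tends to follow the previous few, it can stitch fragments of real words into ones that never appear in the training text, which is roughly how something like “Exetery” could come out.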

Wow, sounds amazing, big props to you! Are you planning on releasing the model? Would be interested tbh :D

Nothing special about it, really. I only followed this TensorFlow tutorial:

https://www.tensorflow.org/text/tutorials/text_generation

The Shakespeare dataset is on there. I also have another model that uses entries from the Joyce Kilmer Memorial Bad Poetry Contest, and also some of the works of William Topaz McGonagall (who is basically the Tommy Wiseau of 19th century English poetry). The code is the same between them, however.

Nice, thx

…yeah dude. Hence artificial intelligence.

There aren’t any cherries in artificial cherry flavoring either 🤷‍♀️ and nobody is claiming there are

I feel like our current “AIs” are like the Virtual Intelligences in Mass Effect. They can perform some tasks and hold a conversation, but they aren’t actually “aware”. We’re still far off from a true AI like the Geth or EDI.

I wish we called them VI’s. It was a good distinction in their ability.

Though honestly I think our AI is more advanced in conversation than a VI in ME.

This was the first thing that came to my mind as well and VI is such an apt term too. But since we live in the shittiest timeline Electronic Arts would probably have taken the Blizzard/Nintendo route too and patented the term.

I wish there was a term without “Intelligence” in it because LLMs aren’t intelligent.

“AI” is always reserved for the latest tech in this space, the previous gens are called what they are. LLMs will be what these are called after a new iteration is out.

I love reading Geth references in the wild.

The way I’ve come to understand it is that LLMs are intelligent in the same way your subconscious is intelligent.

It works off of kneejerk “this feels right” logic, that’s why images look like dreams, realistic until you examine further.

We all have kneejerk responses to situations and questions, but the difference is we filter that through our conscious mind, to apply long-term thinking and our own choices into the mix.

LLMs just keep getting better at the “this feels right” stage, which is why completely novel or niche situations can still trip it up; because it hasn’t developed enough “reflexes” for that problem yet.

LLMs are intelligent in the same way books are intelligent. What makes LLMs really cool is that instead of searching at the book or page granularity, they search at the word granularity. It’s not thinking, but all the thinking was done for it already by humans who encoded their intelligence into words. It’s still incredibly powerful: at its best it could make it so no task ever needs to be performed by a human twice, which would have immense efficiency gains for anything information based.

ah, yes, prejudice

They also reason, which is really weird

EXACTLY. There is no problem solving either (except to calculate the most probable text)

Even worse is some of my friends say that alexa is A.I.

… Alexa literally is A.I.? You mean to say that Alexa isn’t AGI. AI is the taking of inputs and outputting something rational. The first AIs were just large if-else complications called First Order Logic. Later AI utilized approximate or brute force state calculations such as probabilistic trees or minimax search. AI controls how people’s lines are drawn in popular art programs such as Clip Studio when they use the helping functions. But none of these AIs could tell me something new, only what they’re designed to compute.

The term AI is a lot more broad than you think.
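To make “brute force state calculations” concrete, here is a minimal minimax sketch. The nested-list game tree and its scores are invented for illustration; no real game is being modelled.

```python
def minimax(node, maximizing=True):
    """Return the best achievable score from `node` if both sides play optimally.

    A node is either a number (a leaf score) or a list of child nodes.
    """
    if isinstance(node, (int, float)):
        return node
    # Players alternate turns, so flip `maximizing` at each level.
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)

# Hypothetical two-move game: we pick a branch, then the opponent picks a leaf.
tree = [[3, 5], [2, 9], [0, 7]]
best = minimax(tree)
print(best)  # 3
```

The opponent would answer each branch with its lowest leaf (3, 2 and 0), so the best we can guarantee is 3. Nothing is learned here; the program just walks every possible state.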

The term AI being used by corporations isn’t some protected and explicit categorization. Any software company alive today, selling what they call AI, isn’t being honest about it. It’s a marketing gimmick. The same shit we fall for all the time. “Grass fed” meat products aren’t actually 100% grass fed at all. “Healthy: Fat Free!” foods just replace the fat with sugar and/or corn syrup. Women’s dress sizes are universally inconsistent across all clothing brands in existence.

If you trust a corporation to tell you that their product is exactly what they market it as, you’re only gullible. It’s forgivable. But calling something AI when it’s clearly not, as if the term is so broad it can apply to any old if-else chain of logic, is proof that their marketing worked exactly as intended.

The term AI is older than the idea of machine learning. AI is a rectangle where machine learning is a square. And deep learning is a unit square.

Please, don’t muddy the waters. That’s what caused the AI winter of the 1970s. But do go after the liars. I’m all for that.

The term AI is a lot more broad than you think.

That is precisely what I dislike. It’s kinda like calling those crappy scooter thingies “hoverboards”. It’s just a marketing term. I simply oppose the use of “AI” for the weak kinds of AI we have right now and I’d prefer “AI” to only refer to strong AI. Though that is of course not within my power to force upon people and most people seem to not care one bit, so eh 🤷🏼‍♂️


I think there is a difference in definition between us… I would define a proper ai as intelligent meaning they have the ability to problem solve

I still don’t follow your logic. You say that GPT has no ability to problem solve, yet it clearly has the ability to solve problems? Of course it isn’t infallible, but neither is anything else with the ability to solve problems. Can you explain what you mean here in a little more detail?

One of the most difficult problems that AI attempts to solve in the Alexa pipeline is, “What is the desired intent of the received command?” To give an example of the purpose of this question, as well as how Alexa may fail to answer it correctly: I have a smart bulb in a fixture, and I gave it a human name. When I say, “Alexa, make Mr. Smith white,” one of two things will happen, depending on the current context (probably including previous commands, tone, etc.):

- It will change the color of the smart bulb to white

- It will refuse to answer, assuming that I’m asking it to make a person named Mr. Smith… white.

It’s an amusing situation, but also a necessary one: there will always exist contexts in which always selecting one response over the other would be incorrect.

See that’s hard to define. What I mean is things like reasoning and understanding. Let’s take your example as an… Example. Obviously you can’t turn a person white so they probably mean the led. Now you could ask if they meant the led but it’s not critical so let’s just do it and the person will complain if it’s wrong. Thing is, yes, you can train an AI to act like this but in the end it doesn’t understand what it’s doing, only (maybe) if it did it right or wrong. Like ChatGPT doesn’t understand what it’s saying. It cannot grasp concepts, it can only try to emulate understanding although it doesn’t know how or even what understanding is. In the end it’s just a question of the complexity of the algorithm (cause we are just algorithms too) and I wouldn’t consider current “AI” to be complex enough to be called intelligent

(Sorry if this is a bit on the low quality side in terms of readability and grammar but this was hastily written under a bit of time pressure)

Obviously you can’t turn a person white so they probably mean the led.

This is true, but it still has to distinguish between facetious remarks and genuine commands. If you say, “Alexa, go fuck yourself,” it needs to be able to discern that it should not attempt to act on the input.

Intelligence is a spectrum, not a binary classification. It is roughly proportional to the complexity of the task and the accuracy with which the solution completes the task correctly. It is difficult to quantify these metrics with respect to the task of useful language generation, but at the very least we can say that the complexity is remarkable. It also feels prudent to point out that humans do not know why they do what they do unless they consciously decide to record their decision-making process and act according to the result. In other words, when given the prompt “solve x^2-1=0 for x”, I can instinctively answer “x = {+1, -1}”, but I cannot tell you why I answered this way, as I did not use the quadratic formula in my head. Any attempt to explain my decision process later would be no more than an educated guess, susceptible to similar false justifications and hallucinations that GPT experiences. I haven’t watched it yet, but I think this video may explain what I mean.

Edit: this is the video I was thinking of, from CGP Grey.

Hmm it seems like we have different perspectives. For example I cannot do something I don’t understand, meaning if I do a calculation in my head I can tell you exactly how I got there because I have to think through every step of the process. This starts at something as simple as 9 + 3 where I have to actively think about the calculation, it goes like this in my head: 9 + 3… Take 1 from 3, add it to 9 = 10 + 2 = 12. This also applies to more complex things, which on one hand means I am regularly slower than my peers but I understand more stuff than them.

So I think because of our different… Thinking (?) We both lack a critical part in understanding each other’s viewpoint

Anyhow back to ai.

Intelligence is a spectrum, not a binary classification

Yeah that’s the problem, where does the spectrum start… Like I wouldn’t call a virus, bacteria or single cell intelligent, yet somehow a bunch of them is arguing about what intelligence is. I think this is just a case of how you define intelligence, which would vary from person to person. Also, I agree that LLMs are unfathomably complex. However I wouldn’t classify them as intelligent, yet. In any case it was an interesting and fun conversation to have but I will end it here and go to sleep. Thanks for having an actual formal disagreement and not just immediately going for insults. Have a great day/night

And I wouldn’t call a human intelligent if TV was anything to go by. Unfortunately, humans do things they don’t understand constantly and confidently. It’s common place, and you could call it fake it until you make it, but a lot of times it’s more of people thinking they understand something.

LLMs do things confident that they will satisfy their fitness function, but they do not have the ability to see farther than that at this time. Just sounds like politics to me.

I’m being a touch facetious, of course, but the idea that the line has to be drawn upon that term, intelligence, is a bit too narrow for me. I prefer to use the terms Artificial Narrow Intelligence and Artificial General Intelligence as they are better defined. Narrow referring to it being designed for one task and one task only, such as LLMs which are designed to minimize a loss function of people accepting the output as “acceptable” language, which is a highly volatile target. AGI or Strong AI is AI that can generalize outside of its targeted fitness function, and do so continuously. I don’t mean a computer vision neural network that is able to classify anomalies as something that the car should stop for. That’s out of distribution reasoning, sure, but if it can reasonably determine the thing in bounds as part of its loss function, then anything that falls significantly outside can be easily flagged. That’s not true generalization, more of domain recognition, but it is important in a lot of safety critical applications.
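As a rough illustration of what “flagging anything that falls significantly outside” can look like, here is a minimal sketch. It assumes each input has already been reduced to a single score, and it uses a plain mean-plus-or-minus-k-standard-deviations rule, which is a crude stand-in for whatever a real perception system does. The function names, the scores and the threshold `k` are all hypothetical.

```python
from statistics import mean, stdev

def fit_bounds(in_domain_scores, k=3.0):
    """Learn an 'in bounds' interval from scores seen during training."""
    mu = mean(in_domain_scores)
    sigma = stdev(in_domain_scores)
    return mu - k * sigma, mu + k * sigma

def is_out_of_domain(score, bounds):
    """Flag anything that falls outside the learned interval."""
    low, high = bounds
    return not (low <= score <= high)

# Made-up scores standing in for what the model saw in training.
training_scores = [0.9, 1.1, 1.0, 0.95, 1.05, 1.0, 0.98, 1.02]
bounds = fit_bounds(training_scores)

print(is_out_of_domain(1.03, bounds))  # False: looks like the training data
print(is_out_of_domain(4.2, bounds))   # True: far outside, so flag it
```

Note that the check only says “this is unfamiliar”. It does nothing to work out what the unfamiliar thing is, which is the gap between domain recognition and generalization.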

This is an important conversation to have though. The way we use language is highly personal based upon our experiences, and that makes coming to an understanding in natural languages hard. Constructed languages aren’t the answer because any language in use undergoes change. If the term AI is to change, people will have to understand that the scientific term will not, and pop sci magazines WILL get harder to understand. That’s why I propose splitting the ideas in a way that allows for more nuanced discussions, instead of redefining terms that are present in thousands of ground breaking research papers over a century, which will make research a matter of historical linguistics as well as one of mathematical understanding. Jargon is already hard enough as it is.


Alexa is AI. She’s artificially intelligent. Moreso than an ant or a pigeon, and I’d call those animals pretty smart.

Nobody is claiming there is problem solving in LLMs, and you don’t need problem solving skills to be artificially intelligent. The same way a knife doesn’t have to be a Swiss army knife to be called a “knife.”

I mean, people generally don’t have problem solving skills, yet we call them “intelligent” and “sentient” so…

There’s a lot more to intelligence and sentience than just problem solving. One of them is recalling data and effectively communicating it.

Recalling data, communication. Two things humans are notoriously bad at…

I just realized I interpreted your comment backwards the first time lol. When I wrote that I had “people don’t have issues with problem solving” in my head

If an LLM is just regurgitating information in a learned pattern and therefore it isn’t real intelligence, I have really bad news for ~80% of people.

I know a few people who would fit that definition

Like almost all politicians?

LLMs are a step towards AI in the same sense that a big ladder is a step towards the moon.

Been destroyed for this opinion here. Not many practitioners here, just laymen and mostly techbros in this field… But maybe I haven’t found the right node?

I’m into local diffusion models and open source llms only, not into the megacorp stuff

If anything people really need to start experimenting beyond talking to it like it’s human, or in a few years we will end up with a huge AI-illiterate population.

I’ve had someone fight me stubbornly, talking about local LLMs as “an overhyped downloadable chatbot app” and saying the people on fossai are just a bunch of AI-worshipping fools.

I was like, tell me you know absolutely nothing about what you are talking about by pretending to know everything.

But the thing is it’s really fun and exciting to work with, the open source community is extremely nice and helpful, one of the most non toxic fields I have dabbled in! It’s very fun to test parameters tools and write code chains to try different stuff and it’s come a long way, it’s rewarding too because you get really fun responses

Aren’t the open source LLMs still censored though? I read someone make an off-hand comment that one of the big ones (OLLAMA or something?) was censored past version 1 so you couldn’t ask it to tell you how to make meth?

I don’t wanna make meth but if OSS LLMs are being censored already it makes having a local one pretty fucking pointless, no? You may as well just use ChatGPT. Pray tell me your thoughts?

Depends on who made the model and how. Llama is a Meta product and it’s genuinely really powerful (I wonder where Zuckerberg gets all the data for it)

Because it’s powerful you see many people use it as a starting point to develop their own AI ideas and systems. But it’s not the only decent open source model, and the innovations that work for one model often work for all others, so it doesn’t matter in the end.

Every single model used now will be completely outdated and forgotten in a year or 2. Even GPT-4 and Gemini

Holy crap, didn’t expect him to admit it this soon:

Zuck Brags About How Much of Your Facebook, Instagram Posts Will Power His AI

Could be legal issues, if an llm tells you how to make meth but gets a step or two wrong and results in your death, might be a case for the family to sue.

But i also don’t know what all you mean when you say censorship.

But i also don’t know what all you mean when you say censorship.

It was literally just that. The commenter I saw said something like “it’s censored after ver 1 so don’t expect it to tell you how to cook meth.”

But when I hear the word “censored” I think of all the stuff ChatGPT refuses to talk about. It won’t write jokes about protected groups and VAST swathes of stuff around it. Like asking it to define “fag-got” can make it cough and refuse even though it’s a British food-stuff.

Blocking anything sexual - so no romantic/erotica novel writing.

The latest complaint about ChatGPT is its laziness, which I can’t help feeling is due to over-zealous censorship. Censorship doesn’t just block the specific things but entirely innocent things (see fag-got above).

Want help writing a book about Hitler being seduced by a Jewish woman and BDSM scenes? No chance. No talking about Hitler, sex, Jewish people or BDSM. That’s censorship.

I’m using these as examples - I’ve no real interest in these but I am affected by annoyances and having to reword requests because they’ve been mis-interpreted as touching on censored subjects.

Just take a look at r/ChatGPT and you’ll see endless posts by people complaining they triggered its censorship over asinine prompts.

Oh ok, then yea that’s a problem, any censorship that’s not directly related to liability issues should be nipped in the bud.

No there are many uncensored ones as well

Have you ever considered you might be, you know, wrong?

No sorry you’re definitely 100% correct. You hold a well-reasoned, evidenced scientific opinion, you just haven’t found the right node yet.

Perhaps a mental gymnastics node would suit sir better? One without all us laymen and tech bros clogging up the place.

Or you could create your own instance populated by AIs where you can debate them about the origins of consciousness until androids dream of electric sheep?

Do you even understand my viewpoint?

Why only personal attacks and nothing else?

You obviously have hate issues, which is exactly why I have a problem with techbros explaining why llms suck.

They haven’t researched them or understood how they work.

It’s a fucking incredibly fast developing new science.

Nobody understands how it works.

It’s so silly to pretend to know how bad it works when people working with them daily discover new ways the technology surprises us. Idiotic to be pessimistic about such a field.

You obviously have hate issues

Says the person who starts chucking out insults the second they get downvoted.

From what I gather, anyone that disagrees with you is a tech bro with issues, which is quite pathetic to the point that it barely warrants a response but here goes…

I think I understand your viewpoint. You like playing around with AI models and have bought into the hype so much that you’ve completely failed to consider their limitations.

People do understand how they work; it’s clever mathematics. The tech is amazing and will no doubt bring numerous positive applications for humanity, but there’s no need to go around making outlandish claims like they understand or reason in the same way living beings do.

You consider intelligence to be nothing more than parroting which is, quite frankly, dangerous thinking and says a lot about your reductionist worldview.

You may redefine the word “understanding” and attribute it to an algorithm if you wish, but myself and others are allowed to disagree. No rigorous evidence currently exists that we can replicate any aspect of consciousness using a neural network alone.

You say pessimistic, I say realistic.

Haha it’s pure nonsense. Just do a little digging instead of doing the exact guesstimation I am talking about. You obviously don’t understand the field

Once again not offering any sort of valid retort, just claiming anyone that disagrees with you doesn’t understand the field.

I suggest you take a cursory look at how to argue in good faith, learn some maths and maybe look into how neural networks are developed. Then study some neuroscience and how much we comprehend the brain and maybe then we can resume the discussion.

You attack my viewpoint, but misunderstood it. I corrected you. Now you tell me I am wrong with my viewpoint (I am not btw) and start going down the idiotic path of bad faith conversation, while strawman arguing your own bad faith accusation, only because you are butthurt that you didn’t understand. Childish approach.

You don’t understand, because no expert currently understands these things completely. It’s pure nonsense defecation coming out of your mouth

You don’t really have one lol. You’ve read too many pop-sci articles from AI proponents and haven’t understood any of the underlying tech.

All your retorts boil down to copying my arguments because you seem to be incapable of original thought. Therefore it’s not surprising you believe neural networks are approaching sentience and consider imitation to be the same as intelligence.

You seem to think there’s something mystical about neural networks but there is not, just layers of complexity that are difficult for humans to unpick.

You argue like a religious zealot or Trump supporter because at this point it seems you don’t understand basic logic or how the scientific method works.

All the stuff on the dbzero instance is pro open source and pro piracy so fairly anti corpo and not tech illiterate

Thanks, I’ll join in

You’ve just described most people…

P-Zombies, all of them. I happen to be the only one to actually exist. What are the odds, right? But it’s true.

It figures you’d say it, it’s probably your algorithm trying to mess with my mind!

Keep seething, OpenAI’s LLMs will never achieve AGI that will replace people

That was never the goal… You might as well say that a bowling ball will never be effectively used to play golf.

That was never the goal…

Most CEOs seem to not have got the memo…

They did, I think. It’s most of the general public that don’t know. CEOs just take advantage of this to sell shit.

I agree, but it’s so annoying when you work as IT and your non-IT boss thinks AI is the solution to every problem.

At my previous work I had to explain to my boss at least once a month why we can’t have AI diagnosing patients (at a dental clinic) or reading scans or proposing dental plans… It was maddening.

I find that these LLMs are great tools for a professional. So no, you still need the professional, but it is handy if an AI would say, please check these places. A tool, not a replacement.

Buddy, nobody ever said it would

Keep seething

Keep projecting

Next you’ll tell me that the enemies that I face in video games aren’t real AI either!

I said AGI deliberately…

As someone who loves Asimov and has read nearly all of his work:

I absolutely bloody hate calling LLMs AI. Without a doubt they are neat, but they are absolutely nothing in the ballpark of AI, and that’s okay! They weren’t trying to make a synthetic brain, it’s just the cultural narrative I am most annoyed at.

I look at all these kids glued to their phones and I ask “Where’s the Frankenstein Complex now that we really need it?”