- cross-posted to:

- [email protected]

- [email protected]

cross-posted from: https://discuss.online/post/32799122

I have issues with this article, but the point about the environment is interesting.

the author’s Substack bio says “Director of EA DC”

his website explains the acronym - it’s “Effective Altruism DC”

at this point, your alarm bells should start ringing.

but if you are blissfully unaware, “effective altruism” is a goddamn scam. it is an attempt by Silicon Valley oligarchs and techbros to wrap “I shouldn’t have to pay taxes” in a philosophical cloak. no more, no less.

take all of his claims about “no bro AI datacenters are totally fine don’t listen to the naysayers” with a Lot’s-wife-sized pillar of salt.

Damn that’s some next level detective work. Thx for the details

Edit: God it just gets worse and worse. I just peeked at the website for his “EA DC” org and their board of directors literally includes an exec from Anthropic. This guy is literally taking money from the AI industry. This article is a complete joke.

Consider mentioning this in the title for those who skip comments.

Maybe “[Likely sponsored by Anthropic]”

Author rests the entire argument on ignoring the cumulative effects of individual use on a large scale. Whole lotta writing for such a small idea.

By the same logic, it follows:

IC engines are not bad for the environment.

Consuming meat is not bad for the environment.

A single vote has no effect on the outcome of an election.

One official taking a bribe isn’t a big deal.

pitiful, abject nonsense

I think a lot of the volume of GenAI queries is unsolicited, like Google running one for every search. Ban that and the datacenter volume drops a lot.

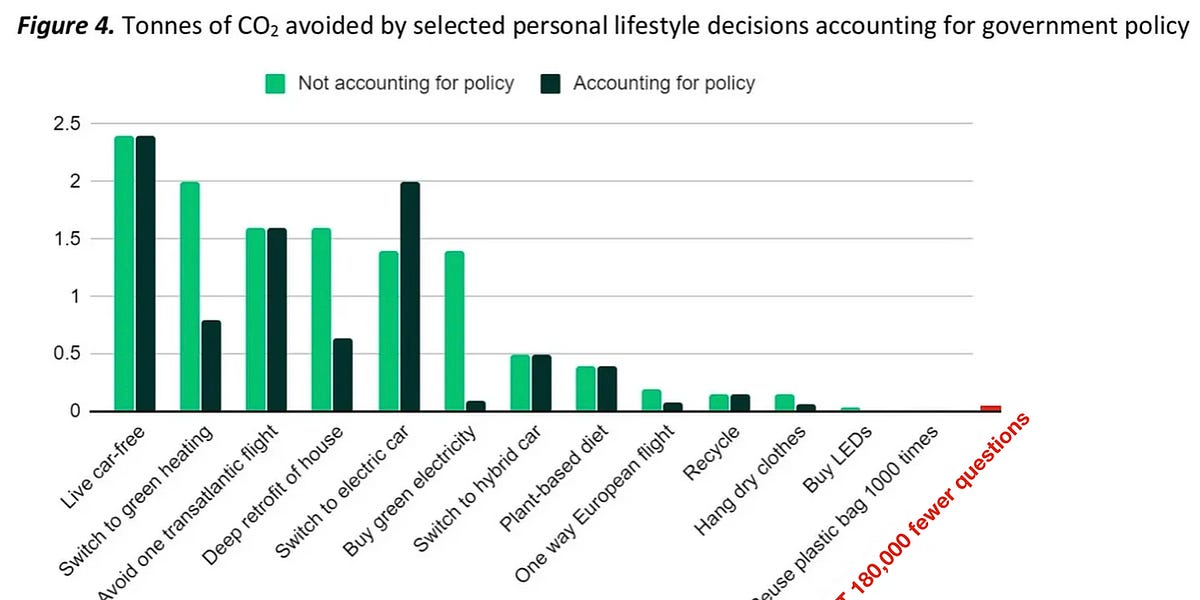

I found the arguments about the environment convincing - he really does a great breakdown and comparison of other, individual carbon emission sources and clearly explains why one person’s heavy ChatGPT usage is nothing compared to, say, using a laptop for an hour. I still hate ChatGPT and the rest for all the OTHER reasons that we all know by now, but on the environmental point, I felt this article was persuasive. Overly long, but persuasive.

Well, it is true that in the bigger picture there are larger fish to fry, but LLMs are so useless* that their relative environmental impact feels like an especially stupid and pointless waste.

*I have tried them quite a bit and even ran open models myself, and I am still extremely underwhelmed by their actual usefulness. Yes, the first impression is cool, and they can write corporate emails well, but beyond that? I can’t help but think people like the author of the text (although probably most of that was written by AI) are deluding themselves about the usefulness.

There are of course some narrow use cases like automatic transcription or text translation where modern machine learning is useful, but that stuff can run on a phone now 🤷

More than doubting their usefulness, I wonder whether they’re hurting/slowing people more than they’re helping.

There are studies as well as anecdotes of people giving up vibe coding because it takes more effort to review and fix sloppy code than to write it yourself.

I occasionally come across a well-written online tutorial that seems to answer the exact problem I’m trying to solve, and later realize it’s a complete dead end with made-up API/function names, even though it looks really convincing. Those are likely AI-generated tutorials with severe hallucinations and bad/zero disclosure.

I’m now blacklisting whole websites using uBlock to avoid wasting more of my time reading that.