- 9 Posts

- 14 Comments

7·1 year ago

These people aren’t real nerds.

3·1 year ago

As in with Eliza where we interpret there as being humanity behind it? Or that ultimately “humans demanding we leave stuff to humans because those things are human” is ok?

4·1 year ago

To be fair, the more imaginative ones have entire educational models built around teaching the societally transformative power of bitcoin.

15·1 year ago

Promptfondler sounds like an Aphex Twin song title.

1·1 year ago

the truth in the joke is that you’re a huge nerd

Oh absolutely. Yes, I think part of my fascination with all of this is that I could quite easily have gone the tech bro hype train route. I’m naturally very good at getting into the weeds of tech and understanding how it works. I love systems (love factory, strategy and logistics games), love learning techy skills purely to see how they work, etc. I taught myself to code just because the primary software for a particular form of qualitative analysis annoyed me. I feel I am a prime candidate for this whole world.

But at the same time I really dislike the impoverished viewpoint that comes with being only in that space. There’s just some things that don’t fit that mode of thought. I also don’t have ultimate faith in science and tech, probably because the social sciences captured me at an early age, but also because I have an annoying habit of never being comfortable with what I think, so I’m constantly reflecting and rethinking, which I don’t think gels well with the tech bro hype train. That’s why I embrace the moniker of “Luddite with an IDE”. Captures most of it!

35·1 year ago

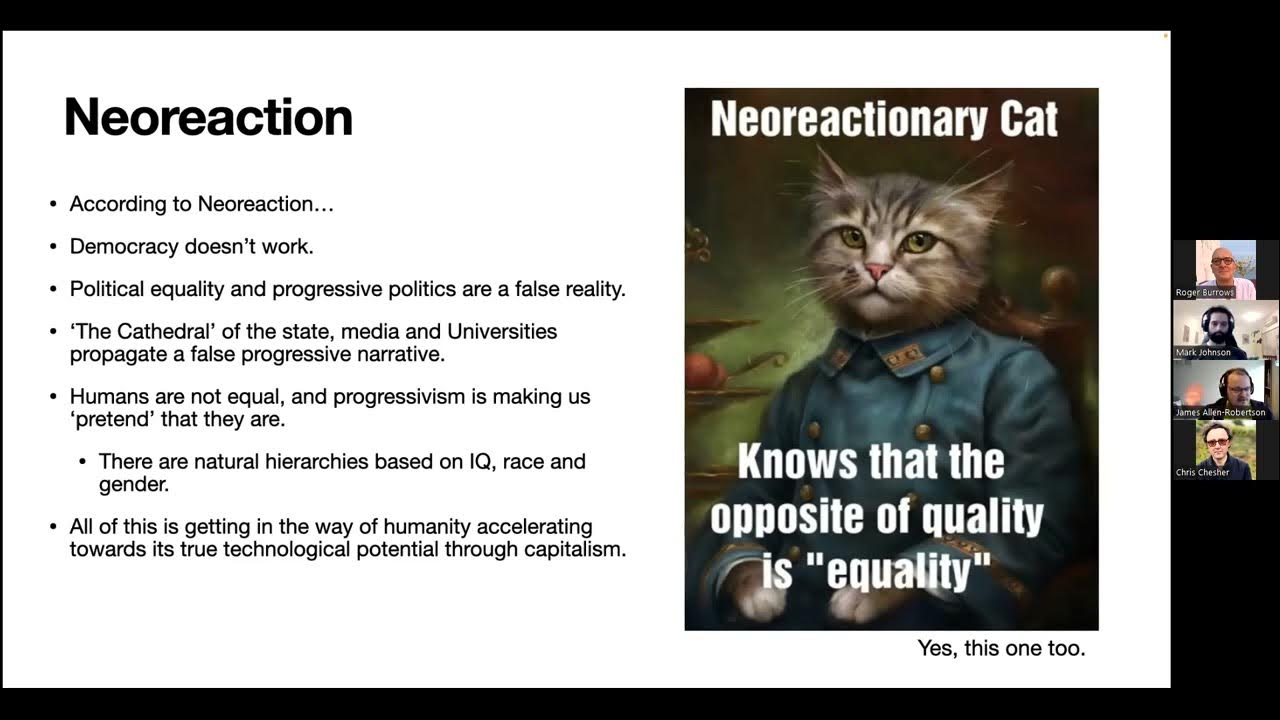

The learning facilitators they mention are the key to understanding all of this. They need them to actually maintain discipline and ensure the kids engage with the AI, so they need humans in the room still. But now roles that were once teachers have been redefined as “Learning facilitators”. Apparently former teachers have rejoined the school in these new roles.

Like a lot of automation, the main selling point is deskilling roles, reducing pay, making people more easily replaceable (you don’t need a teaching qualification to be a “learning facilitator” to the AI) and producing a worse service which is just good enough if it is wrapped in difficult-to-verify claims and assumptions about what education actually is. Of course it also means that you get a new middleman parasite siphoning off funds that used to flow to staff.

1·1 year ago

I remember one time in a research project I switched out the tokeniser to see what impact it might have on my output. Spent about a day re-running and the difference was minimal. I imagine it’s wholly the same thing.

*Disclaimer: I don’t actually imagine it is wholly the same thing.

0·1 year ago

Does this mean they’re not going to bother training a whole new model again? I was looking forward to seeing AI Mad Cow Disease after it consumed an Internet’s worth of AI generated content.

0·1 year ago

My hyperfixation for the last 4 years has been the band Lawrence. Eight-piece Soul Funk group with a brass section and two lead vocalists.

The musicianship is incredible. Saw them live last month and you could tell there was no click track as the band members improvised off each other and the crowd. They were having a genuinely good time on stage messing around and the energy was infectious. Genuinely the most fun I’ve had in years.

Also co-vocalist Gracie’s voice! I’ve heard their albums so many times and there are still moments I find myself muttering blasphemy as she fucking belts it out.

As I get older my music tastes have definitely broadened from my relatively narrow range of Seattle Grunge and metal. Still with this band, my partner doesn’t quite know what’s happened to me.

Anyway, I recommend this live recording of Hip Replacement from last month.

1·1 year ago

Who could have predicted that a first-principles, ground-up new Internet protocol based on monarchism would be a difficult sell?

*I mean, I think that’s what Urbit is. I’ve read multiple pieces describing it and I’m still not really clear.

1·1 year ago

Based on my avid following of the Trashfuture podcast, I can authoritatively say that the “Hoon” programming language relies primarily on Australians doing sick burns and popping tyres in their Holden Commodores.

0·1 year ago

There’s definitely something to this narrowing of opportunities idea. To frame it in a real bare-bones way, it’s people who frame the world in simplistic terms and then assume that their framing is the complete picture (because they’re super clever of course). Then if they try to address the problem with a “solution”, they simply address their abstraction of it and, if successful in the market, actually make the abstraction the dominant form of it. However, all the things they disregarded are either lost, or still there and undermining their solution.

It’s like taking a 3D problem, only seeing in 2D, implementing a 2D solution and then being surprised that it doesn’t seem to do what it should, or being confused by all these unexpected effects that are coming from the 3rd dimension.

Your comment about giving more grace also reminds me of work out there from legal scholars who argued that algorithmically implemented law doesn’t work because the law itself is designed to have a degree of interpretation and slack to it that rarely translates well to an “if x then y” model.

0·1 year ago

I feel like generative AI is an indicator of a broader pattern of innovation in stagnation (shower thoughts here, I’m not bringing sources to this game).

I was just a little while ago wondering if there is an argument to be made that the innovations of the post-war period were far more radically and beneficially transformative to most people. Stuff like accessible dishwashers, home tools, better home refrigeration etc. I feel like now tech is just here to make things worse. I can’t think of any upcoming or recent home tech product that I’m remotely excited about.

It’s truly a wonder where these topics will take you.