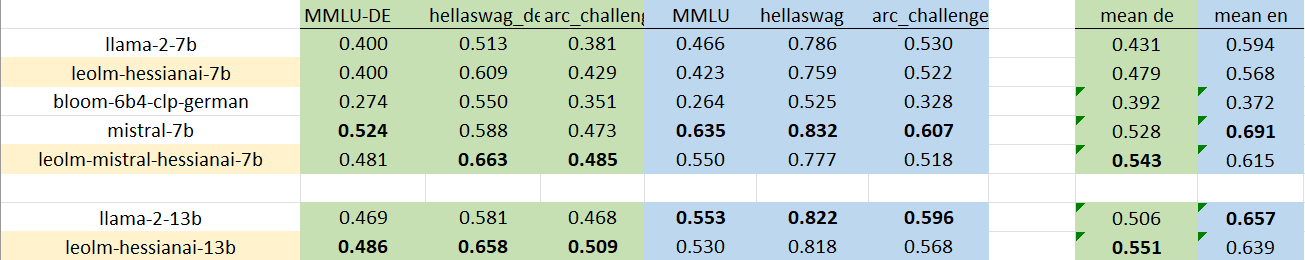

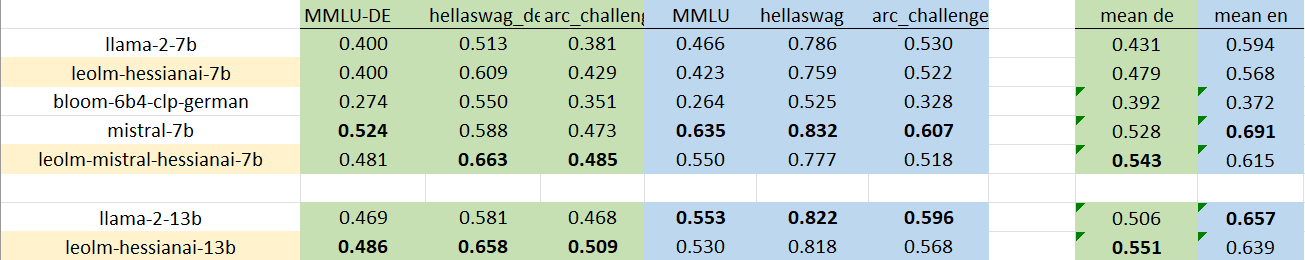

Our models extend Mistral’s capabilities into German through continued pretraining on a large corpus of German-language, mostly locality-specific text.

Other fine-tuned models for foreign languages:

TL;DR You’re right, PyTorch and transformers need more memory.

I will respond to the CPU inference first, for the transformers library.

In transformers, I don’t use quant. :L If you’re used to the Q_4 speed, then it will be slower than that. For a 7B it’s almost okay on CPU.

Yeah, it seems like you use low quant downloads. D: It’s not for you.

But you were on the right track with that 15GB download, because you downloaded the raw release. That one is neither GPTQ nor AWQ, and it is what we use in transformers (for new releases). ^^
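To see roughly where the 15GB comes from, here is a back-of-the-envelope sketch. The parameter count (about 7.24 billion for a 7B-class model) and the bits-per-weight figures are assumptions for illustration; real files also carry metadata and quantization scales, so actual downloads will differ a bit.

```python
# Rough weight-size estimate; ignores metadata, quantization scales, and KV cache.
PARAMS = 7.24e9  # assumed parameter count for a 7B-class model


def weights_gb(params: float, bits_per_weight: float) -> float:
    """Return the approximate size of the weights in GB (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for name, bits in [("fp32", 32), ("fp16/bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{name:>10}: {weights_gb(PARAMS, bits):5.1f} GB")
```

The 16-bit row is the one that lines up with the raw release, and the 4-bit row is why the low quant downloads feel so much lighter.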

That’s why I prefer c/rust code, it just works. It will always be faster whatever HF will release, with or without quant.

Right, C/Rust code is more optimized.

PyTorch w/o an Nvidia card is less common >:D That’s how I started.

Imho most GitHub sources release buggy code: they do not set device('cpu') for CPU users. Avoiding dependency hell is a must. I prefer a commented single file, not a complex Python project that spits out “bitsandbytes” errors.

So does HF, in their *cough* code *cough*. It is likely that the same code in C is also more readable.

The reason I mentioned transformers is that this line takes care of new model releases with all the bugfixes, just as ***cpp projects do.

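```python
from transformers import pipeline

# Downloads the raw (unquantized) release on first use.
generator = pipeline('text-generation', model='NousResearch/Nous-Capybara-7B')
generator('Here is the prompt.')
```

We run out of context?! Fix that. Use rotary embedding scaling.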

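```python
from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

model_id = "NousResearch/Nous-Capybara-7B"
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    rope_scaling={"type": "dynamic", "factor": 2.0},
)
# When a model object is passed, the pipeline needs the tokenizer explicitly.
tokenizer = AutoTokenizer.from_pretrained(model_id)
generator = pipeline('text-generation', model=model, tokenizer=tokenizer)
generator('Here is the prompt.')
```

Does it eat all your RAM? It does. It just works ™ with fine print.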
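For the curious, here is a small sketch of what "dynamic" scaling with a factor of 2.0 does, as far as I understand the dynamic NTK variant: inside the trained window nothing changes, and past it the rotary base grows with the sequence length. The defaults below (base 10000, head dimension 128, a 4096-token trained window) are assumptions for illustration, not values read from this model's config.

```python
def dynamic_ntk_base(seq_len, base=10000.0, dim=128, max_pos=4096, factor=2.0):
    """Return the RoPE base for a given sequence length under dynamic NTK scaling."""
    if seq_len <= max_pos:
        return base  # within the trained window: the base is left alone
    # Past the trained window, stretch the base so the rotation frequencies slow down.
    scale = (factor * seq_len / max_pos) - (factor - 1)
    return base * scale ** (dim / (dim - 2))


for n in (2048, 4096, 8192, 16384):
    print(n, round(dynamic_ntk_base(n)))
```

The base only moves once the prompt is longer than the trained window, which is why short prompts behave exactly as before.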

How to train with tools? Download another tool! With transformers:

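```python
from transformers import AutoModelForCausalLM
from trl import SFTTrainer

model = AutoModelForCausalLM.from_pretrained(
    "NousResearch/Nous-Capybara-7B",
    use_flash_attention_2=True,
)
# `dataset` is assumed to be a datasets.Dataset with a "text" column, loaded beforehand.
trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    dataset_text_field="text",
    max_seq_length=512,  # context size is 512
)
trainer.train()
```

Does it eat all your RAM? Yup, it goes beyond 64GB.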
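Why it blows past 64GB: full fine-tuning has to hold the weights, their gradients, and the optimizer state all at once. A rough sketch, assuming about 7.24 billion parameters, fp32 everywhere, and an Adam-style optimizer with two moment buffers per parameter (all assumptions, and activations are not even counted):

```python
PARAMS = 7.24e9  # assumed parameter count for a 7B-class model


def full_finetune_gb(params, weight_bytes=4, grad_bytes=4, optim_bytes=8):
    """Weights + gradients + two Adam moment buffers, in GB; activations excluded."""
    return params * (weight_bytes + grad_bytes + optim_bytes) / 1e9


print(f"{full_finetune_gb(PARAMS):.0f} GB before activations")
```

Lower-precision weights shrink the first term, but the gradient and optimizer terms are what really hurt.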

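```python
from peft import LoraConfig

# Reuses `model` and `dataset` from the previous snippet.
peft_config = LoraConfig(r=16, task_type="CAUSAL_LM")
trainer = SFTTrainer(
    model,
    train_dataset=dataset,
    dataset_text_field="text",
    peft_config=peft_config,
)
```

Now it eats less RAM with LoRA.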
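A quick count shows why. LoRA freezes the original weights and trains two small matrices per adapted layer, so gradients and optimizer state are only needed for those. The hidden size, layer count, and the choice of two square projections per layer below are assumptions for illustration; the real numbers depend on the model and on which modules get adapted.

```python
def lora_params(d_in, d_out, r):
    """LoRA adds A (r x d_in) and B (d_out x r) next to a frozen d_out x d_in weight."""
    return r * (d_in + d_out)


HIDDEN = 4096          # assumed hidden size
LAYERS = 32            # assumed number of transformer layers
ADAPTED_PER_LAYER = 2  # assumed: two square projections adapted per layer

trainable = LAYERS * ADAPTED_PER_LAYER * lora_params(HIDDEN, HIDDEN, r=16)
print(f"{trainable:,} trainable parameters")
```

That is millions of trainable parameters instead of billions, so the gradient and optimizer memory shrinks accordingly, while the frozen base weights still have to fit in RAM.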