AutoMod caught me on the official thread, so I'm continuing my discussion here on 🤗 Lemmy.

I'm trying out some ideas until someone comes up with better ones.

A chat app prototype can listen to other services

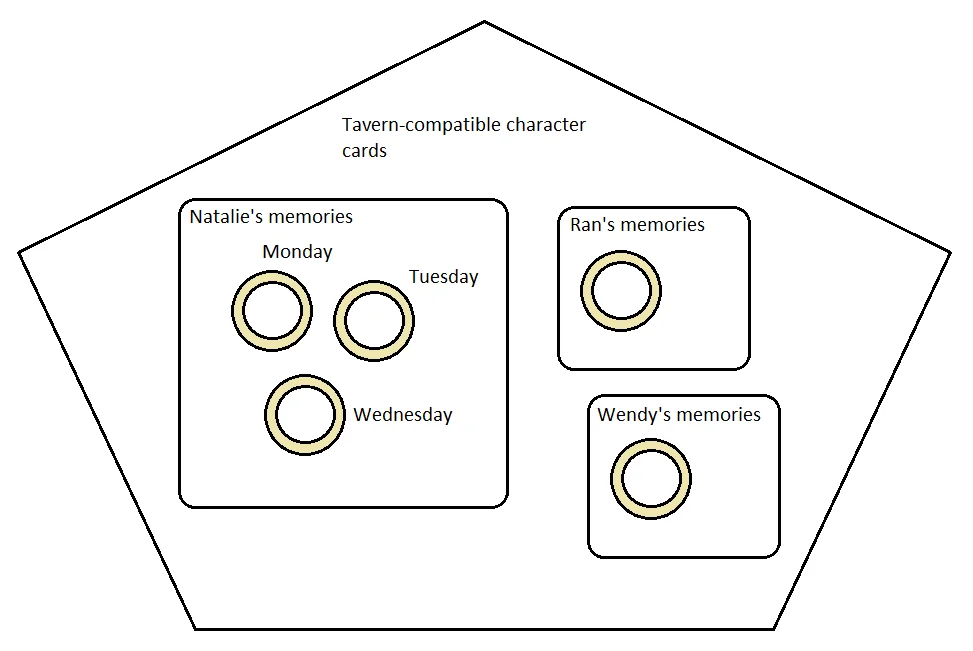

- import TavernAI (webp) characters

- save chat log (text file, Trilium note taking tool)

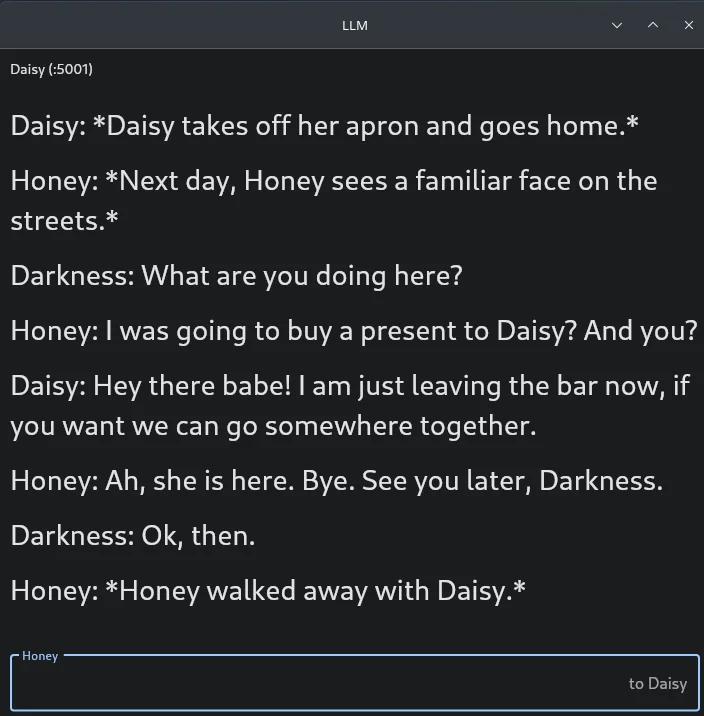

- communicate with different language models at the same time, with different roles (RP, summarization), different sizes (22b, 13b, 7b)

- the free Azure speech API gives voice to the character

- internal Flutter/CLI test interface

Changes since last time

Introduction of summarization and a simplified memory retrieval

Language model: After many disappointments with the capabilities of large language models, I now use models that are ten times smaller to summarize both chat logs and character descriptions.

Characters can be changed at any time, and they can leave the room as the story progresses. The summarization keeps track of such changes. If you talk to person B, the last time you talked to person A won’t be included in the summary unless A is also there.
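
A minimal sketch of that presence rule, assuming each log entry records who was in the room when it was written. The `Message` class, the `present` field and `lines_for_summary` are hypothetical names for illustration, not the prototype's actual code.

```python
from dataclasses import dataclass


@dataclass
class Message:
    speaker: str
    text: str
    present: frozenset  # everyone in the room when the message was sent (assumed field)


def lines_for_summary(log, in_room):
    """Keep only messages whose participants are all in the room right now."""
    in_room = frozenset(in_room)
    return [m for m in log if m.present <= in_room]


log = [
    Message("A", "See you at the harbor.", frozenset({"user", "A"})),
    Message("B", "The shop opens at nine.", frozenset({"user", "B"})),
]

# Talking to B alone: the earlier talk with A is left out of the summary input.
only_b = lines_for_summary(log, {"user", "B"})

# A is back in the room: both conversations are eligible again.
everyone = lines_for_summary(log, {"user", "A", "B"})
```

The filtered list is what would be handed to the summarization model, so an absent character's last conversation never reaches the prompt.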

Pressing the Tab key lets the user choose whom to talk to

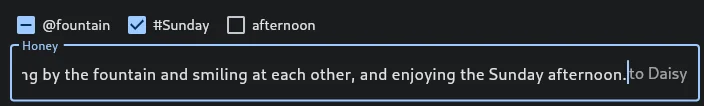

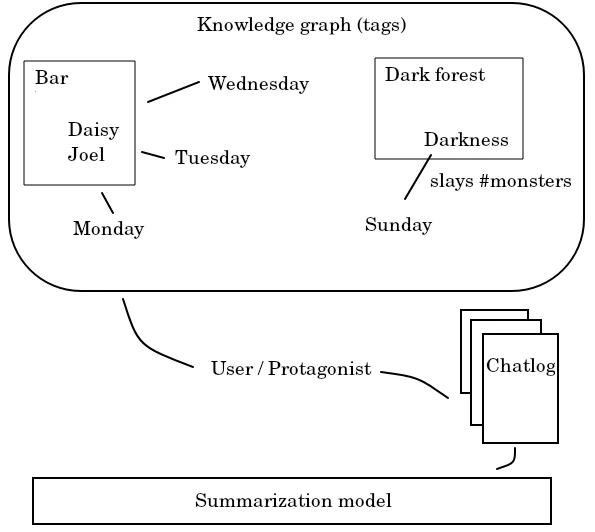

TL;DR before you continue. “During its search (before summarization), the sentence similarity algorithm favors tagged sentences over non-tagged ones in the chat log.”
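
To illustrate what "favors tagged sentences" could mean, here is a rough sketch. It assumes a plain bag-of-words cosine similarity as a stand-in for whatever sentence similarity algorithm is really used, and a made-up `tag_boost` multiplier; both are assumptions for the example.

```python
import math
import re
from collections import Counter

TAG = re.compile(r"[@#]\w+")   # a word marked with @ or #
WORD = re.compile(r"\w+")


def vectorize(text):
    """Bag-of-words vector; the tag symbols themselves are ignored."""
    return Counter(w.lower() for w in WORD.findall(text))


def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def rank(query, sentences, tag_boost=1.5):
    """Sort sentences by similarity to the query, boosting tagged ones."""
    q = vectorize(query)
    scored = []
    for s in sentences:
        score = cosine(q, vectorize(s))
        if TAG.search(s):
            score *= tag_boost  # hypothetical boost factor
        scored.append((score, s))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored]


sentences = ["We met in the kitchen.", "We met in the @kitchen."]
ranked = rank("kitchen", sentences)
```

Both sentences have the same raw similarity to the query, so the tagged one ends up first only because of the boost.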

The chat must somehow track people, their homes, and their belongings in a complex world.

You can decide which elements of the story are important, then mark those words with symbols in your chat response.

Mark @ locations, # time of the day, or # objects in the world

The flow of time is expressed through these tags. (#morning -> #noon -> #evening). If a character goes to sleep at night, it (usually) wakes up the next morning. The chat keeps track of these things in its small calendar, as long as the words are marked.
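
A small sketch of how such tags could be read from a message and turned into a calendar. The regular expressions, the `Calendar` class and the extra `night` step are assumptions for illustration; only the `#morning -> #noon -> #evening` order comes from the description above.

```python
import re

LOCATION = re.compile(r"@(\w+)")
HASHTAG = re.compile(r"#(\w+)")
TIMES_OF_DAY = ["morning", "noon", "evening", "night"]  # "night" is an assumed extra step


def parse_tags(message):
    """Split the marked words of a message into locations and hashtags."""
    return {
        "locations": LOCATION.findall(message),
        "hashtags": HASHTAG.findall(message),
    }


class Calendar:
    """Tracks the day number and time of day from time tags only."""

    def __init__(self):
        self.day = 1
        self.time_of_day = "morning"

    def observe(self, message):
        for tag in HASHTAG.findall(message):
            tag = tag.lower()
            if tag not in TIMES_OF_DAY:
                continue  # an object tag, not a time tag
            # Going backwards in the day (evening -> morning) means a new day began.
            if TIMES_OF_DAY.index(tag) < TIMES_OF_DAY.index(self.time_of_day):
                self.day += 1
            self.time_of_day = tag


cal = Calendar()
cal.observe("I walk to the @market at #noon with my #umbrella.")
cal.observe("Back @home in the #evening, I go to sleep.")
cal.observe("I wake up. It is #morning.")
```

Untagged mentions of time are ignored on purpose, which matches the idea that the calendar only advances when the words are marked.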

The tags are necessary because I want to constrain space and time in the message flow. The chat log accumulates too much information over time, so the character(s) have to forget things unless they are tagged.

Logical consequences

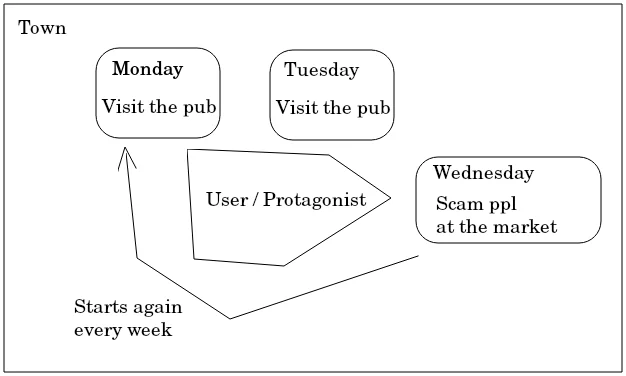

Since the chat log and the characters’ locations are manually tagged, the software is able to track regular actions, for example something that is done every Wednesday.
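
One way to picture the "every Wednesday" detection, as a sketch only: it assumes each tagged event is stored with a date (the prototype may well use its own in-story calendar instead), and `weekly_habits` with its `min_weeks` threshold is a hypothetical helper.

```python
from collections import defaultdict
from datetime import date

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def weekly_habits(events, min_weeks=3):
    """Return {tag: weekday name} for tags that only ever occur on one weekday,
    in at least `min_weeks` different weeks."""
    weeks = defaultdict(set)  # (tag, weekday) -> set of (iso year, iso week)
    for day, tag in events:
        iso = day.isocalendar()
        weeks[(tag, day.weekday())].add((iso[0], iso[1]))

    weekdays_per_tag = defaultdict(set)
    for tag, weekday in weeks:
        weekdays_per_tag[tag].add(weekday)

    habits = {}
    for (tag, weekday), seen in weeks.items():
        if len(seen) >= min_weeks and len(weekdays_per_tag[tag]) == 1:
            habits[tag] = WEEKDAYS[weekday]
    return habits


events = [
    (date(2024, 1, 3), "work"),    # Wednesday
    (date(2024, 1, 10), "work"),   # Wednesday
    (date(2024, 1, 17), "work"),   # Wednesday
    (date(2024, 1, 5), "market"),  # a single Friday, not a habit yet
]
habits = weekly_habits(events)
```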

Our main character has a steady source of income

I tried many methods, but none of them were reliable

- POS tagging

- SVO analysis

- Ask a smaller model about time, location, state of things (broken, fixed) and people (age, health), time of day, day of week etc.

The manual tags are put into a graph, so the software can track changes over time in that graph. If the user has days of chat log, the chat can narrow down the search to the days on which the tag is present.

The knowledge base is no longer linear, but rather a connected graph.
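
A rough sketch of such a tag graph with a per-day index, assuming tags that appear in the same message get connected. `TagGraph`, `add` and `days_with` are hypothetical names, and integer day numbers stand in for whatever the chat's calendar provides.

```python
from collections import defaultdict
from itertools import combinations


class TagGraph:
    def __init__(self):
        self.days = defaultdict(set)        # tag -> days on which it appeared
        self.neighbours = defaultdict(set)  # tag -> tags seen in the same message

    def add(self, day, tags):
        """Record the tags of one message written on the given day."""
        tags = set(tags)
        for tag in tags:
            self.days[tag].add(day)
        for a, b in combinations(tags, 2):
            self.neighbours[a].add(b)
            self.neighbours[b].add(a)

    def days_with(self, *tags):
        """Days on which every given tag appeared; the search can be limited to these."""
        sets = [self.days.get(t, set()) for t in tags]
        return sorted(set.intersection(*sets)) if sets else []


graph = TagGraph()
graph.add(1, ["@home", "#morning"])
graph.add(2, ["@market", "#umbrella"])
graph.add(3, ["@home", "#umbrella"])

umbrella_days = graph.days_with("#umbrella")
home_umbrella_days = graph.days_with("@home", "#umbrella")
```

Only the log entries from the returned days would then need to go through the similarity search and summarization.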

Feed the next prompt with the summarized text

Some models are good at role-playing, but they are terrible at multi-bot conversation. Such systems are more challenging to build than a common conversational chatbot.

There is no other complexity in the user workflow; this is still a regular chat app. There are no server requirements to run the chat, besides the lightweight koboldcpp.

Solid approach. Are you just documenting your thoughts here, or is there a github link or something like that?

I’m looking for feedback on whether or not this could be improved. Regarding open-sourcing this, I’d first need some suggestions for an existing (hackable, documented) user interface that I could integrate this into, because that’s how this would become an end-to-end solution. There are some storytelling projects already in development, such as LlamaTale or Mantella, but they put an LLM into an existing world, so there isn’t much code I could use from those projects. I’m suggesting something that could be used to create a world while I chat with my characters. I use a mixture of models for this (several models with different roles, not MoE in the strict mixture-of-experts sense), but without having to worry about setting up the infrastructure (no separate Redis, SQL, or vector databases). The chat (Flutter) interface above is only for testing, until I find something better.

Ah, alright. I’ve been playing a bit with Python, Langchain, had a look at Microsoft Guidance and vector databases. And tried to implement some companion chatbot that would communicate with me via my favorite chat app. But I dislike many of the libraries I used and learned a few ways not to do it. And I got a bit lost evaluating different models (fine-tunes of llama-based models) and prompt engineering.

It’s quite a complex thing. Let me read your article again and see if I have something constructive left to say. Seems you’ve figured most of the stuff out already. And made some decent choices.

Edit: Since you’re mentioning previous posts… Can you link them so we have more context?

Well, judging by your text, you did your research and read the most obvious papers highlighting the design of agents. In case you missed something, related papers I found are:

And whatever I can’t come up with in this exact moment. This isn’t my area of expertise anyways. A probably good summary is here: https://lilianweng.github.io/posts/2023-06-23-agent/

Your characters/agents obviously need a personality and some kind of internal state / memory and a way to reason / predict their response. So I’d agree with your figures. The file format doesn’t really matter.

Summarization/compression is needed because text tokens are a limited resource and we can’t waste them on mundane stuff.

Storing information depends on the kind of knowledge and how you want to retrieve it. You can use a graph, match keywords. Also a vector database is aligned with the way LLMs work and having to deal with unstructured data. Or just progressively summarize stuff and let older memories degrade. You can combine these approaches.

So most of the things you mention are needed and I don’t see any better alternative.

I didn’t get how the tagging, tracking of things and prioritization works. Especially not how you retrieve that information later. Your hashtags seem like keywords that are the keys of your database. It’s a creative idea to do it this way. I didn’t think of doing that. One thing you can’t do that way is query information like: “Remember the last time we went hiking?” But most things can probably be queried by a place or a person. You could also just hand everything over to a vector database and hope it returns the correct things when needed. Or, better, let your LLM help you with processing and structuring the information. Have a look at the Generative Agents paper and their GitHub page with the demo. Those agents reflect and process memories before storing them in their memory. They do way more than summarization and then store that. If you need to pay attention to latency, don’t do it after each chat message. One idea would be to let it reflect on what happened and process the memories during the night for example, when your server isn’t in use. You can then strip that from the current chat history and put that summary into your database / long-term memory. You can also try and ask your LLM to tag sentences for you.

I’m not sure if it’s really necessary to forget everything about a person while they’re absent. It’s not how the real world works. But your mileage may vary. I’m sure this solves a few issues.

Speaking of the papers: I tried to take some inspiration from the Generative Agents, AutoGPT and BabyAGI. I fiddled around a bit with the LangChain implementations of those. But, I had quite some issues. I think those 33b parameter Llama models aren’t the same as ChatGPT or GPT4. A lot of scientific results from those papers can’t be transferred one to one to what we’re doing here. At least that’s my experience. Also those smaller models aren’t super intelligent, so you need to get your prompts right. Many things don’t work well the way they’re done in LangChain for example if you’re not using ChatGPT.

But I think you can take some inspiration from LangChain. And SillyTavern has examples for prompts specific to roleplay/dialogue. And interesting extensions (SillyTavern-extras) you can have a look at.

If you write your code modular, you can try a few things and swap in and out modules and different ways of storing memories etc.

I also had to waste some time until I found out that using a Llama model for summarization isn’t a good choice. Same applies to embeddings. Use something that is specifically made for the task and it’ll work way, way better. (Or even at all.)

Regarding the UI: Idk. I’d just take some web framework I like or am familiar with. Kinda depends on the programming language. I used an integration to talk to my chatbot via my chat app. But that turned out not to be the best idea. A chat app doesn’t have features like regenerating the last answer, giving feedback, or editing the bot’s reply. All things that are pretty useful and available in all the proper LLM frontends.

Previous posts have been uploaded in PDF format, which can be read online without downloading them first. https://docdro.id/UlaU7wy The multi-bot chat log is now stored in a single file/note; that’s the only change since then.