https://titotal.substack.com/p/diamondoid-bacteria-nanobots-deadly

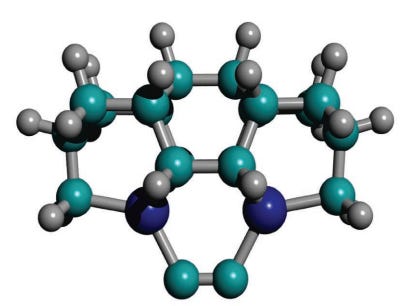

I wrote this article a month or two ago, thought people here might be interested. Drexler-style nanotech research appears to be effectively dead at the moment.

Oh, and Yudkowsky responded to the article with characteristic obliviousness:

> I broadly endorse this reply and have mostly shifted to trying to talk about “covalently bonded” bacteria, since using the term “diamondoid” (tightly covalently bonded CHON) causes people to panic about the lack of currently known mechanosynthesis pathways for tetrahedral carbon lattices.

FWIW the “reply” that Yud “broadly endorse[s]” is the top comment:

https://forum.effectivealtruism.org/posts/g72tGduJMDhqR86Ns/diamondoid-bacteria-nanobots-deadly-threat-or-dead-end-a?commentId=T5FNEgeCCXFjGMsbN

I read his comment in the original post and thought he was endorsing your article, which, for a moment, made me think Yud had a little more magnanimity than I’ve seen before.

RE: covalently bonded bacteria. Here’s evidence of how Yud is joking: he understands bacteria as well as I do, which is not at all. However, I know that bacteria can be spooky, so if I were Yud trying to make a joke about bacteria, I’d google for developments in bacteria research and pick some phrase that stood out.

Bonus: I love the person in the comments who is effectively shouting, “A MILLION SUPERINTELLIGENCES! NANOTECH POSSIBLE! DEBATE ME! I’M VERY SMART!!!” into the void at this point.

Light LEAKS through the CRACKS. My mind is BRIGHTER than it EVER was. THE HIGHER I RISE THE MORE I SEE.

then again look at the way these people actually talk:

the Cultist Simulator quote is significantly more sane than “nah I disagree, a million AI superintelligences can just poof their way into existence regardless of magical nanotech, infrastructure, the speed of light, or any other physical limitations on the amount of compute we can practically fit into one space”

e: fucking “you’ll see”, these people are convinced the rapture is almost here

There should be a Cultist Simulator mod where the principles are the TREACLES components. Expeditions are deep dives where you fend off scientific consensus to find contrarian blog posts as relics. Followers can take damage from information hazards and must be healed by Adderall or nootropics.

…fuck, I like this so much I might look up what it takes to mod Cultist Simulator later today

One of the languages you can learn is “incorrect Japanese”

It is a tragedy how these people only know to understand the world through “who’s stronger superman or goku???”

but who is tho

I believe the standard answer is that Superman would initially defeat Goku, but that would only make Goku stronger.

edit: Sorry, I mean, there is a 96% chance that Superman would initially defeat Goku. However, this would increase Goku’s power by several orders of magnitude, increasing his chances of winning. Each subsequent fight that Goku loses would increase his power recursively, which can be modelled as f(x) = a * 10^(dx). In the limit, Goku wins.

Prior belief: Superman has a 96% chance of defeating Goku.

Evidence examined: According to TVTropes [1], when Goku is defeated he becomes stronger.

Update to belief: It is reasonable to infer that Goku will become infinitely strong. Further, if the conflict takes place one or more years from the present, Goku is likely to have access to an artificial intelligence which either itself defeats Superman or which advises him in effectively defeating Superman. I therefore now assess that Goku has a 95% chance of winning.

the more times you defeat goku, the more likely it is you’ll babysit his kids when he finally wins

a variation on the fast and furious principle

@sc_griffith @Amoeba_Girl Goku’s Basilisk

Pfft. Real heads know that the only way to figure this out is with a prediction market