- cross-posted to:

- [email protected]

- [email protected]

Google is embedding inaudible watermarks right into its AI-generated music

Audio created using Google DeepMind’s AI Lyria model will be watermarked with SynthID to let people identify its AI-generated origins after the fact.

People are listening to AI-generated music? Someone on Bluesky put it best (paraphrased slightly):

If they couldn’t put time into creating it I’m not going to put time into listening to it.

I think I’d rather listen to some custom AI-generated music than the same royalty-free music over and over again.

In both cases they’re just meant to be used in videos and stuff like that; you’re not supposed to actually listen to them.

Fun fact: Steve1989MREInfo uses all of his original music for his videos.

Same with Geowizard. Actually, quite a few “big” channels do, which is awesome.

People are using AI tools to do crazy stuff with music right now. It’s pretty great.

Human performance but AI voice: https://www.youtube.com/watch?v=gbbUWU-0GGE

Carl Wheezer covers: https://www.youtube.com/watch?v=65BrEZxZIVQ

Here is an alternative Piped link(s):

https://www.piped.video/watch?v=gbbUWU-0GGE

https://www.piped.video/watch?v=65BrEZxZIVQ

Piped is a privacy-respecting open-source alternative frontend to YouTube.

I’m open-source; check me out at GitHub.

You tell 'em, bot. 🙌🏽

Can it be much different from the mass-market auto-tuned pap that gets put out today?

The singers of that music actually have to use their voice to sing into a mic compared to someone on a computer typing in a prompt.

As much as I dislike modern pop music, I will definitely say they put in more work than the people who rely solely on an AI that will do all the work based on a prompt.

My own feelings on the matter aside (fuck Google and all that), this has been something people have chased after for a long time. The famous composer Raymond Scott dedicated the latter part of his life to trying to create a machine that did exactly this. Many famous musical creators, such as Michael Jackson, were fascinated by the machine and wanted to use it. The problem was that he never considered it “finished”. The machine worked and it could generate music; it’s immensely fascinating in my opinion.

If you want more information in podcast format, check out episode 542 of 99% Invisible, or here: https://www.thelastarchive.com/season-4/episode-one-piano-player

They go into the people who opposed Scott and why they did, and also talk about the emotion behind music and the artists, and whether it would even work. The most fascinating part of it all is that the machine was kind of forgotten and no longer works. Some currently famous musicians are trying to work together to restore it.

The question then is, if someone created their life’s work and modern musicians spend an immense amount of time restoring the machine, when the machine creates music does that mean no one spent time on it? I enjoy debating the philosophy behind the idea in my head, especially since I have a much more negative view when a modern version of this is done by Google.

I feel like the machine itself would be the art in that case, not necessarily what it creates. Like if someone spent a decade making a machine that could cook FLAWLESS BEEF WELLINGTON, the machine would be far more impressive and artistic than the products it made.

i mean, where do you necessarily draw the line between the machine and what it creates? the machine itself is totally useless without inputs and outputs (not to say art needs utility). the beef wellington machine is only notable for its ability to conjure beef wellington; otherwise it’s just a nothing machine. which is still kind of cool, I guess, but a beef wellington machine that doesn’t make beef wellington disregards the core part of the machine, no?

That was a great episode of 99PI. Would love the machine restored.

IIRC, it’s not so much that it made music, but that it would create loops through iteration to inspire people. He wanted it to make full music, but it was never close to that.

Yeah, I think you’re right, and it was apparently actually random. The longer it would play a loop, the more it would iterate. Such a cool thing to exist.

You will still listen to it, watching movies, advertisements, playing video games…

This is the worst timeline

This is the worst timeline so far.

Not yet.

Ok, boomer.

How’s that microwave dinner taste? Like an A for effort? Yeah, I bet.

A spectrum analysis and bandpass filter should take care of that.
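
What the comment above suggests can be sketched in a few lines. This is a generic brick-wall band-pass filter that zeroes DFT bins outside a chosen range, written in plain Python (no NumPy/SciPy) so it runs anywhere; the function names and the toy 64 Hz signal are illustrative assumptions. It only shows what a band-pass filter does: the article doesn’t say which frequencies SynthID occupies, and DeepMind claims the mark survives common edits, so whether this would remove it is unknown.

```python
import cmath
import math


def dft(x):
    """Naive O(n^2) discrete Fourier transform; fine for a short demo signal."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse DFT, keeping only the real part (the input signal is assumed real)."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def bandpass(samples, sample_rate, low_hz, high_hz):
    """Brick-wall band-pass: zero every DFT bin whose frequency is outside [low_hz, high_hz]."""
    n = len(samples)
    spectrum = dft(samples)
    for k in range(n):
        # Bins above n/2 hold the mirrored negative frequencies of a real signal.
        freq_hz = min(k, n - k) * sample_rate / n
        if not low_hz <= freq_hz <= high_hz:
            spectrum[k] = 0
    return idft(spectrum)


# Toy input (hypothetical): a 4 Hz tone plus a quieter 20 Hz tone, 64 samples at 64 Hz.
rate = 64
tone_4 = [math.sin(2 * math.pi * 4 * i / rate) for i in range(rate)]
tone_20 = [0.5 * math.sin(2 * math.pi * 20 * i / rate) for i in range(rate)]
mixed = [a + b for a, b in zip(tone_4, tone_20)]

filtered = bandpass(mixed, rate, 0, 10)  # keep 0-10 Hz, drop the 20 Hz tone
print(max(abs(f - t) for f, t in zip(filtered, tone_4)))  # tiny: only float rounding is left
```

Real editors use smoother filters than a brick wall, which avoids ringing on non-periodic audio, but the idea of keeping one band and discarding the rest is the same.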

chuckles contemptuously in Audacity

So we’ll just need another AI to remove the watermarks… which I think already exists.

Don’t even need AI. Basic audio editing works.

Lately on YouTube I’ve constantly been bombarded with ai garbage music passed off as normal unknown bands, and it’s getting really annoying. What will happen when there’s an actual new band but everyone ignores them because you would think it’s just ai?

ai garbage music

actual new band but everyone ignores them because you would think it’s just ai

I think you answered your own question.

Omg the AI voice describing a short is infuriating.

“This man was minding his own business not knowing he was about to change this child’s life…Watch how his interaction is measured…”

(three dots) → Do not recommend this channel again

This raises the question: will AI style be the next big trend? Imagine if real painters started painting oil paintings that look uncanny and surreal like AI-generated art, with weird hands or weird eyes. Imagine if a real quartet decided to play an AI-generated piece of music.

The Audacity!

Hehe.

This is the best summary I could come up with:

Audio created using Google DeepMind’s AI Lyria model, such as tracks made with YouTube’s new audio generation features, will be watermarked with SynthID to let people identify their AI-generated origins after the fact.

In a blog post, DeepMind said the watermark shouldn’t be detectable by the human ear and “doesn’t compromise the listening experience,” and added that it should still be detectable even if an audio track is compressed, sped up or down, or has extra noise added.

President Joe Biden’s executive order on artificial intelligence, for example, calls for a new set of government-led standards for watermarking AI-generated content.

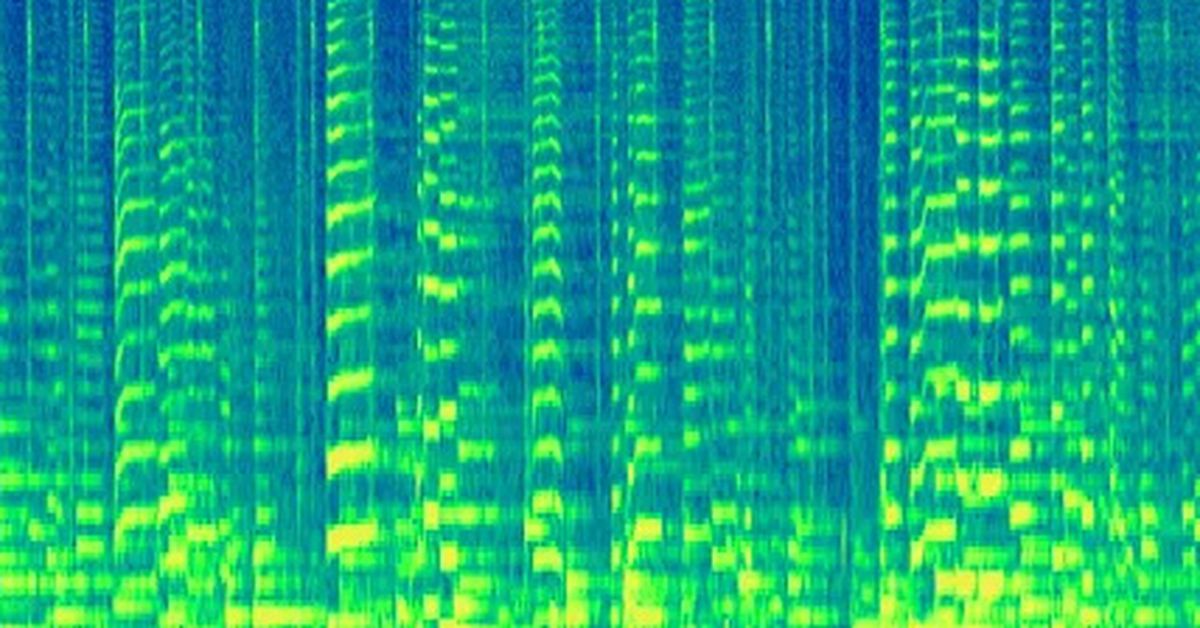

According to DeepMind, SynthID’s audio implementation works by “converting the audio wave into a two-dimensional visualization that shows how the spectrum of frequencies in a sound evolves over time.” It claims the approach is “unlike anything that exists today.”

The news that Google is embedding the watermarking feature into AI-generated audio comes just a few short months after the company released SynthID in beta for images created by Imagen on Google Cloud’s Vertex AI.

The watermark is resistant to editing like cropping or resizing, although DeepMind cautioned that it’s not foolproof against “extreme image manipulations.”

The original article contains 230 words, the summary contains 195 words. Saved 15%. I’m a bot and I’m open source!

it does this by converting the audio into a 2d visualisation that shows how the spectrum of frequencies evolves in a sound over time

Old school windows media player has entered the chat

Seriously fuck off with this jargon, it doesn’t explain anything

That’s actually an accurate description of what is happening: an audio file turned into a 2d image with the x axis being time, the y axis being frequency and color being amplitude.

That’s literally a spectrograph

Spectrogram*

Your mom’s literally a spectrograph.

Sounds like a bad journalist hasn’t understood the explanation. A spectrogram contains all the same data as was originally encoded. I guess all it means is that the watermark is applied in the frequency domain.
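
To make the spectrogram description in this thread concrete (time on one axis, frequency on the other, amplitude as colour), here is a minimal short-time Fourier transform (STFT) in plain Python. It is a generic textbook sketch, not DeepMind’s code; the frame size and test tone are arbitrary assumptions. It also shows the sense in which the spectrogram “contains all the same data”: the complex STFT (magnitude and phase) inverts back to the original samples, whereas the magnitude-only image you see on screen drops the phase.

```python
import cmath
import math


def stft(samples, frame_size):
    """Complex short-time DFT: rows are time frames, columns are frequency bins.

    Uses non-overlapping, unwindowed frames to keep the sketch short (trailing
    samples that don't fill a frame are dropped); real tools use overlapping
    tapered windows such as Hann.
    """
    frames = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        chunk = samples[start:start + frame_size]
        frames.append([sum(chunk[t] * cmath.exp(-2j * math.pi * k * t / frame_size)
                           for t in range(frame_size))
                       for k in range(frame_size)])
    return frames


def spectrogram(frames):
    """Magnitude per bin: the 'colour' of each pixel. Phase is discarded here."""
    return [[abs(b) for b in row] for row in frames]


def istft(frames):
    """Invert stft(): inverse DFT of each frame, concatenated back in time order."""
    out = []
    for row in frames:
        n = len(row)
        out.extend((sum(row[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
                   for t in range(n))
    return out


# Toy signal: a tone that lands exactly on bin 5 of every 32-sample frame.
signal = [math.sin(2 * math.pi * 5 * i / 32) for i in range(128)]
frames = stft(signal, 32)
image = spectrogram(frames)
# Look only at bins 0..16 (the non-negative frequencies of a real signal).
peak_bins = [max(range(17), key=lambda k: row[k]) for row in image]
print(peak_bins)  # [5, 5, 5, 5]: the same bright pixel in every time frame
rebuilt = istft(frames)
print(max(abs(a - b) for a, b in zip(signal, rebuilt)))  # float rounding only
```

A watermark “applied in the frequency domain” would, in this picture, mean nudging values in `frames` before calling `istft`; how SynthID actually chooses and shapes those changes is not described in the article.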

Also this isn’t new by any stretch… Aphex Twin would like a word

Well, encoding stuff in the spectrogram isn’t new, sure. But encoding stuff into an audio file that is inaudible but robust to incidental modifications to the file is much harder. Aphex Twin’s stuff is audible!
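
To show why a faint mark can still survive incidental edits, here is a toy version of a classic technique, spread-spectrum watermarking: a low-level pseudorandom pattern derived from a secret key is added to the samples and later found by correlation. This is an illustration under stated assumptions, not how SynthID works (the article only says it works on a spectrogram-like representation); the key values, strength, and uniform-noise “audio” are made up.

```python
import random


def key_chips(key, n):
    """Pseudorandom +1/-1 sequence derived from a secret integer key (toy scheme)."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples, key, strength=0.01):
    """Add a faint keyed pattern, well below the level of the host audio."""
    return [s + strength * c for s, c in zip(samples, key_chips(key, len(samples)))]


def detect(samples, key):
    """Correlate with the keyed pattern: about `strength` if marked, about 0 if not."""
    chips = key_chips(key, len(samples))
    return sum(s * c for s, c in zip(samples, chips)) / len(samples)


rng = random.Random(1)
host = [rng.uniform(-0.5, 0.5) for _ in range(200_000)]  # stand-in for real audio
marked = embed(host, key=42)
noisy = [s + rng.uniform(-0.2, 0.2) for s in marked]      # "incidental" added noise

print(round(detect(noisy, key=42), 3))  # roughly 0.01: mark still found
print(round(detect(noisy, key=7), 3))   # roughly 0.0: wrong key finds nothing
```

It survives added noise because the keyed pattern adds up consistently over many samples while the noise averages out. This naive version would lose sync if the audio were sped up, slowed down, or cropped, which is exactly the kind of robustness a production scheme has to engineer separately.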

I would like to know what it is that makes it so robust. The article explains very little. Is it in the high frequencies? Higher than the human ear can hear? Compression will affect that, plus that’s going to piss dogs off. Could be something with the phasing too. Filters and effects might be able to get rid of the watermark.

I don’t know what frequencies are annoying for dogs but I’m guessing it’s above 24kHz so no sound file or sound system is going to be able to store or produce it anyway.
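
The 24 kHz figure above is the Nyquist limit for 48 kHz audio: a sampled signal can only represent frequencies up to half its sample rate. A one-line check (the function name is just illustrative) covers the common rates. Note that higher-rate formats such as 96 kHz can in principle store content up to 48 kHz.

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a sampled signal can represent: half the sample rate."""
    return sample_rate_hz / 2


for rate in (44_100, 48_000, 96_000):
    print(f"{rate} Hz sampling -> content up to {nyquist_hz(rate):,.0f} Hz")
```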

There will certainly be some way to get rid of the watermark. But it might nevertheless persist through common filters.

I wonder if being able to generate music will make people less interested in actually bothering to learn how to do it themselves. Having AI tools makes many things so much easier, and you only need a rudimentary understanding of the subject.

Yeah, like most people don’t realise, but until about 1900 most piano music was played by humans; of course, there were no pianists after the invention of the pianola with its perforated rolls of notes and mechanical keys.

It’s sad: drums were things you hit with a stick once, but Mr Theremin ensured you never see a drummer anymore, while Mr Moog effectively ended bass and rhythm guitars with the synthesizer…

It’s a shame; it would be fun to go see a four-piece band performing live, but that’s impossible now that no one plays instruments anymore.

People are never going to stop learning to play instruments. If anything, they’ll get inspired by using AI to make music and it’ll get them interested in learning to play. They’ll then use AI tools to help them learn, and when they get to be truly skilled with their instrument, they’ll meet up with some awesomely talented friends to form a band which creates painfully boring and indulgent branded rock.

I believe it will depend on a couple of different factors. Putting keywords into a generator isn’t the same as laying your hands on an instrument and being able to physically play it yourself. However, if the result is so perfect and beautiful that a person could never possibly have come up with it on their own, it might be discouraging (but I can’t really see that happening).

Maybe, but people who are good at things already can use it as a tool to be better. You can combine the skills you do have with AI for the skills you don’t have to make something you never could have before.

I like to make games and for me this means I could make my own game music. I just don’t have the skills to do that on my own and make it sound good. But with ai I could get music that matches the quality of my other work.

That’s like putting a watermark beside the bill. If it is inaudible, then you can just delete it.

So basically it’s security through obscurity, since once people know, they can and will edit it out, especially those who want to use it for deception.