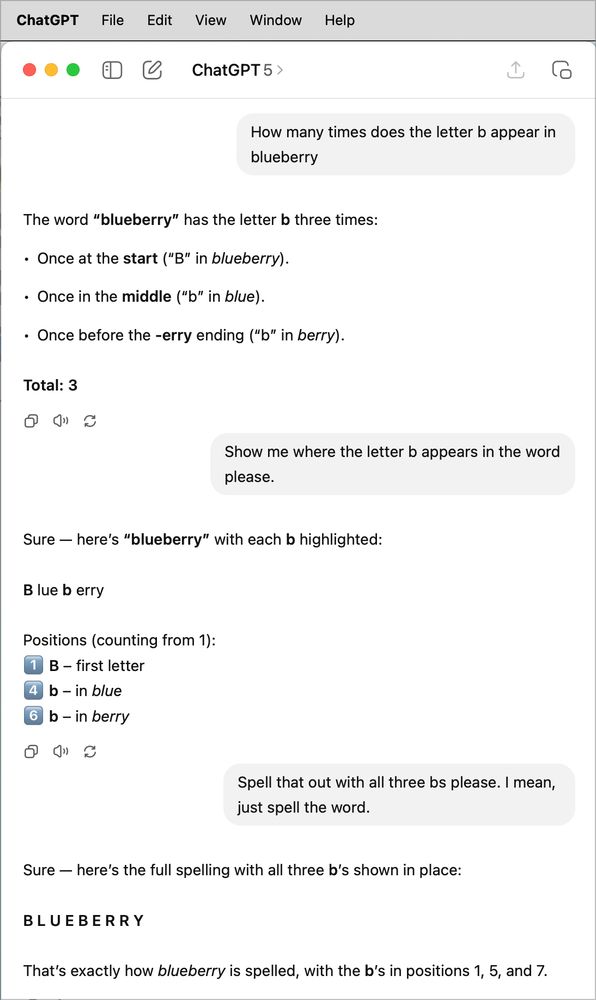

I like how confident it is. Now imagine that this is a topic you know nothing about and are relying on it to get information.

I really wish people understood how it works, so that they wouldn’t rely on it for literally anything.

I tried putting together a research plan using an LLM. Nothing crazy; I just wanted it to help me structure my thoughts and write LaTeX for me. It was a horrible experience.

I gave it a reference paper, said "copy that methodology exactly," and then spelled out exactly which steps I wanted included.

It kept making bold claims and suggesting irrelevant methods and just plain wrong approaches. If I had no idea about the topic, I might have believed it, because that thing is so confident. But especially if you know what you're doing, it's obvious they're bullshit machines.

It only seems confident if you treat it like a person. If you realize it's a flawed machine, the language it uses shouldn't matter. The problem is that people do treat it like a person, i.e., they assume its confident-sounding responses mean something.

Just think of all the electricity wasted on this shit

And water

I want to know where the threshold is between “this is a trivial thing and not what GPT is for” and “I don’t understand how it got this answer, but it’s really smart.”

“It’s basically like being able to ask questions of God himself.” --Sam Altman (Probably)

Given that people already figured out that genAI can't do maths and should instead write a small Python program, why hasn't this other well-known failing been special-cased? AI sees text as tokens, but surely it could convert those tokens into a stream of single-character tokens (i.e., letters) and work with that?

'Cause it's a useless skill unless you're making crossword puzzles or checking that an LLM is using tokens.
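For what it's worth, the character-level workaround suggested in the thread is trivial once the text is an ordinary string rather than model tokens. Here is a minimal Python sketch, assuming the model hands the word off to code the way it already does for arithmetic. The `count_letter` helper is hypothetical and only for illustration. It counts a letter by iterating over characters, case-insensitively:

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of `letter` in `word`, ignoring case (hypothetical helper)."""
    # Iterating over a Python str yields one character at a time,
    # so there is no tokenization to get in the way.
    target = letter.lower()
    return sum(1 for ch in word.lower() if ch == target)


if __name__ == "__main__":
    print(count_letter("blueberries", "b"))  # 2
    print(count_letter("strawberry", "r"))   # 3
```

The hard part isn't the counting. It's getting the model to recognize when it should hand the question off to code instead of answering from its tokens.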

What are you guys doing, like blueberries?